1 集群环境实践¶

1.1 单主集群¶

1.1.1 基础环境部署¶

学习目标

这一节,我们从 集群规划、主机认证、小结 三个方面来学习。

集群规划

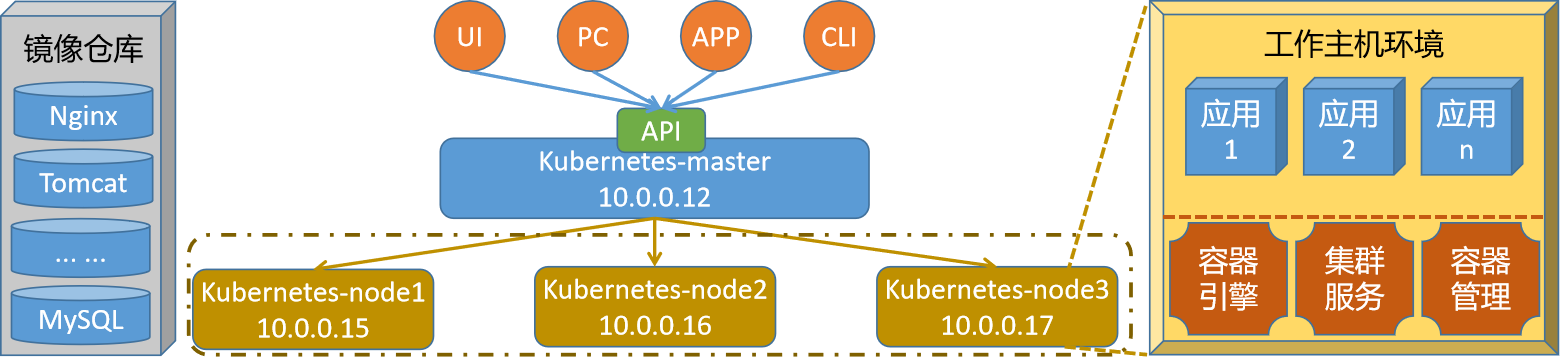

简介

在这里,我们以单主分布式的主机节点效果来演示kubernetes的最新版本的集群环境部署。

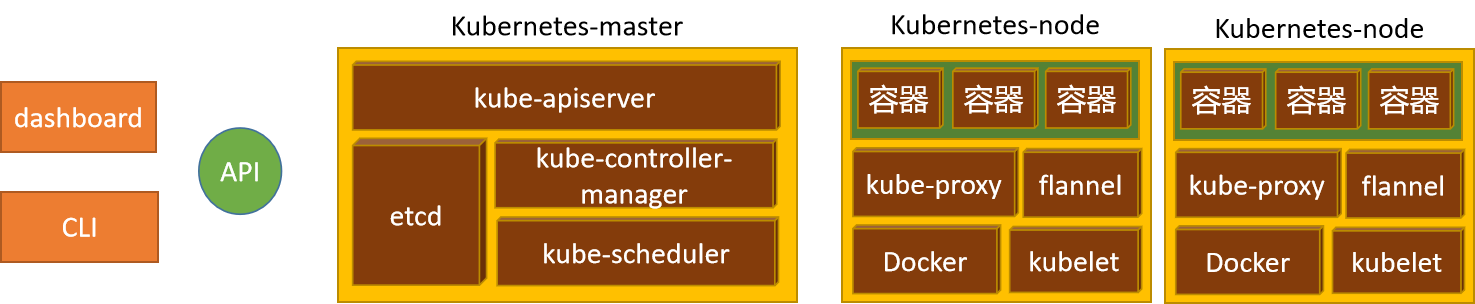

节点集群组件规划

master节点

kubeadm(集群环境)、kubectl(集群管理)、kubelet(节点状态)

kube-apiserver、kube-controller-manager、kube-scheduler、etcd

containerd(docker方式部署)、flannel(插件部署)

node节点

kubeadm(集群环境)、kubelet(节点状态)

kube-proxy、containerd(docker方式部署)、flannel(插件部署)

主机名规划

| 序号 | 主机ip | 主机名规划 |

|---|---|---|

| 1 | 10.0.0.12 | kubernetes-master.superopsmsb.com kubernetes-master |

| 2 | 10.0.0.15 | kubernetes-node1.superopsmsb.com kubernetes-node1 |

| 3 | 10.0.0.16 | kubernetes-node2.superopsmsb.com kubernetes-node2 |

| 4 | 10.0.0.17 | kubernetes-node3.superopsmsb.com kubernetes-node3 |

| 5 | 10.0.0.20 | kubernetes-register.superopsmsb.com kubernetes-register |

修改master节点主机的hosts文件

[root@localhost ~]# cat /etc/hosts

10.0.0.12 kubernetes-master.superopsmsb.com kubernetes-master

10.0.0.15 kubernetes-node1.superopsmsb.com kubernetes-node1

10.0.0.16 kubernetes-node2.superopsmsb.com kubernetes-node2

10.0.0.17 kubernetes-node3.superopsmsb.com kubernetes-node3

10.0.0.20 kubernetes-register.superopsmsb.com kubernetes-register

主机认证

简介

因为整个集群节点中的很多文件配置都是一样的,所以我们需要配置跨主机免密码认证方式来定制集群的认证通信机制,这样后续在批量操作命令的时候,就非常轻松了。

为了让ssh通信速度更快一些,我们需要对ssh的配置文件进行一些基本的改造

[root@localhost ~]# egrep 'APIA|DNS' /etc/ssh/sshd_config

GSSAPIAuthentication no

UseDNS no

[root@localhost ~]# systemctl restart sshd

注意:

这部分的操作,应该在所有集群主机环境初始化的时候进行设定

脚本内容

[root@localhost ~]# cat /data/scripts/01_remote_host_auth.sh

#!/bin/bash

# 功能: 批量设定远程主机免密码认证

# 版本: v0.2

# 作者: 书记

# 联系: superopsmsb.com

# 准备工作

user_dir='/root'

host_file='/etc/hosts'

login_user='root'

login_pass='123456'

target_type=(部署 免密 同步 主机名 退出)

# 菜单

menu(){

echo -e "\e[31m批量设定远程主机免密码认证管理界面\e[0m"

echo "====================================================="

echo -e "\e[32m 1: 部署环境 2: 免密认证 3: 同步hosts \e[0m"

echo -e "\e[32m 4: 设定主机名 5:退出操作 \e[0m"

echo "====================================================="

}

# expect环境

expect_install(){

if [ -f /usr/bin/expect ]

then

echo -e "\e[33mexpect环境已经部署完毕\e[0m"

else

yum install expect -y >> /dev/null 2>&1 && echo -e "\e[33mexpect软件安装完毕\e[0m" || (echo -e "\e[33mexpect软件安装失败\e[0m" && exit)

fi

}

# 秘钥文件生成环境

create_authkey(){

# 保证历史文件清空

[ -d ${user_dir}/.ssh ] && rm -rf ${user_dir}/.ssh/*

# 构建秘钥文件对

/usr/bin/ssh-keygen -t rsa -P "" -f ${user_dir}/.ssh/id_rsa

echo -e "\e[33m秘钥文件已经创建完毕\e[0m"

}

# expect自动匹配逻辑

expect_autoauth_func(){

# 接收外部参数

command="$@"

expect -c "

spawn ${command}

expect {

\"yes/no\" {send \"yes\r\"; exp_continue}

\"*password*\" {send \"${login_pass}\r\"; exp_continue}

\"*password*\" {send \"${login_pass}\r\"}

}"

}

# 跨主机传输文件认证

sshkey_auth_func(){

# 接收外部的参数

local host_list="$*"

for ip in ${host_list}

do

# /usr/bin/ssh-copy-id -i ${user_dir}/.ssh/id_rsa.pub root@10.0.0.12

cmd="/usr/bin/ssh-copy-id -i ${user_dir}/.ssh/id_rsa.pub"

remote_host="${login_user}@${ip}"

expect_autoauth_func ${cmd} ${remote_host}

done

}

# 跨主机同步hosts文件

scp_hosts_func(){

# 接收外部的参数

local host_list="$*"

for ip in ${host_list}

do

remote_host="${login_user}@${ip}"

scp ${host_file} ${remote_host}:${host_file}

done

}

# 跨主机设定主机名规划

set_hostname_func(){

# 接收外部的参数

local host_list="$*"

for ip in ${host_list}

do

host_name=$(grep ${ip} ${host_file}|awk '{print $NF}')

remote_host="${login_user}@${ip}"

ssh ${remote_host} "hostnamectl set-hostname ${host_name}"

done

}

# 帮助信息逻辑

Usage(){

echo "请输入有效的操作id"

}

# 逻辑入口

while true

do

menu

read -p "请输入有效的操作id: " target_id

if [ ${#target_type[@]} -ge ${target_id} ]

then

if [ ${target_type[${target_id}-1]} == "部署" ]

then

echo "开始部署环境操作..."

expect_install

create_authkey

elif [ ${target_type[${target_id}-1]} == "免密" ]

then

read -p "请输入需要批量远程主机认证的主机列表范围(示例: {12..19}): " num_list

ip_list=$(eval echo 10.0.0.$num_list)

echo "开始执行免密认证操作..."

sshkey_auth_func ${ip_list}

elif [ ${target_type[${target_id}-1]} == "同步" ]

then

read -p "请输入需要批量远程主机同步hosts的主机列表范围(示例: {12..19}): " num_list

ip_list=$(eval echo 10.0.0.$num_list)

echo "开始执行同步hosts文件操作..."

scp_hosts_func ${ip_list}

elif [ ${target_type[${target_id}-1]} == "主机名" ]

then

read -p "请输入需要批量设定远程主机主机名的主机列表范围(示例: {12..19}): " num_list

ip_list=$(eval echo 10.0.0.$num_list)

echo "开始执行设定主机名操作..."

set_hostname_func ${ip_list}

elif [ ${target_type[${target_id}-1]} == "退出" ]

then

echo "开始退出管理界面..."

exit

fi

else

Usage

fi

done

为了更好的把环境部署成功,最好提前更新一下软件源信息

[root@localhost ~]# yum makecache

查看脚本执行效果

[root@localhost ~]# /bin/bash /data/scripts/01_remote_host_auth.sh

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 1

开始部署环境操作...

expect环境已经部署完毕

Generating public/private rsa key pair.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:u/Tzk0d9sNtG6r9Kx+6xPaENNqT3Lw178XWXQhX1yMw root@kubernetes-master

The key's randomart image is:

+---[RSA 2048]----+

| .+|

| + o.|

| E .|

| o. |

| S + +.|

| . . *=+B|

| o o+B%O|

| . o. +.O+X|

| . .o.=+XB|

+----[SHA256]-----+

秘钥文件已经创建完毕

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 2

请输入需要批量远程主机认证的主机列表范围(示例: {12..19}): 12

开始执行免密认证操作...

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@10.0.0.12

...

Now try logging into the machine, with: "ssh 'root@10.0.0.12'"

and check to make sure that only the key(s) you wanted were added.

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 2

请输入需要批量远程主机认证的主机列表范围(示例: {12..19}): {15..17}

开始执行免密认证操作...

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@10.0.0.15

...

Now try logging into the machine, with: "ssh 'root@10.0.0.15'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@10.0.0.16

...

Now try logging into the machine, with: "ssh 'root@10.0.0.16'"

and check to make sure that only the key(s) you wanted were added.

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@10.0.0.17

...

Now try logging into the machine, with: "ssh 'root@10.0.0.17'"

and check to make sure that only the key(s) you wanted were added.

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 2

请输入需要批量远程主机认证的主机列表范围(示例: {12..19}): 20

开始执行免密认证操作...

spawn /usr/bin/ssh-copy-id -i /root/.ssh/id_rsa.pub root@10.0.0.20

...

Now try logging into the machine, with: "ssh 'root@10.0.0.20'"

and check to make sure that only the key(s) you wanted were added.

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 3

请输入需要批量远程主机同步hosts的主机列表范围(示例: {12..19}): 12

开始执行同步hosts文件操作...

hosts 100% 470 1.2MB/s 00:00

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 3

请输入需要批量远程主机同步hosts的主机列表范围(示例: {12..19}): {15..17}

开始执行同步hosts文件操作...

hosts 100% 470 226.5KB/s 00:00

hosts 100% 470 458.8KB/s 00:00

hosts 100% 470 533.1KB/s 00:00

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 3

请输入需要批量远程主机同步hosts的主机列表范围(示例: {12..19}): 20

开始执行同步hosts文件操作...

hosts 100% 470 287.8KB/s 00:00

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 4

请输入需要批量设定远程主机主机名的主机列表范围(示例: {12..19}): 12

开始执行设定主机名操作...

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 4

请输入需要批量设定远程主机主机名的主机列表范围(示例: {12..19}): {15..17}

开始执行设定主机名操作...

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 4

请输入需要批量设定远程主机主机名的主机列表范围(示例: {12..19}): 20

开始执行设定主机名操作...

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 5

开始退出管理界面...

检查效果

[root@localhost ~]# exec /bin/bash

[root@kubernetes-master ~]# for i in 12 {15..17} 20

> do

> name=$(ssh root@10.0.0.$i "hostname")

> echo 10.0.0.$i $name

> done

10.0.0.12 kubernetes-master

10.0.0.15 kubernetes-node1

10.0.0.16 kubernetes-node2

10.0.0.17 kubernetes-node3

10.0.0.20 kubernetes-register

小结

1.1.2 集群基础环境¶

学习目标

这一节,我们从 内核调整、软件源配置、小结 三个方面来学习。

内核调整

简介

根据kubernetes官方资料的相关信息,我们需要对kubernetes集群是所有主机进行内核参数的调整。

禁用swap

部署集群时,kubeadm默认会预先检查当前主机是否禁用了Swap设备,并在未禁用时强制终止部署过程。因此,在主机内存资源充裕的条件下,需要禁用所有的Swap设备,否则,就需要在后文的kubeadm init及kubeadm join命令执行时额外使用相关的选项忽略检查错误

关闭Swap设备,需要分两步完成。

首先是关闭当前已启用的所有Swap设备:

swapoff -a

而后编辑/etc/fstab配置文件,注释用于挂载Swap设备的所有行。

方法一:手工编辑

vim /etc/fstab

# UUID=0a55fdb5-a9d8-4215-80f7-f42f75644f69 none swap sw 0 0

方法二:

sed -i 's/.*swap.*/#&/' /etc/fstab

替换后位置的&代表前面匹配的整行内容

注意:

只需要注释掉自动挂载SWAP分区项即可,防止机子重启后swap启用

内核(禁用swap)参数

cat >> /etc/sysctl.d/k8s.conf << EOF

vm.swappiness=0

EOF

sysctl -p /etc/sysctl.d/k8s.conf

网络参数

配置iptables参数,使得流经网桥的流量也经过iptables/netfilter防火墙

cat >> /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

配置生效

modprobe br_netfilter

modprobe overlay

sysctl -p /etc/sysctl.d/k8s.conf

脚本方式

[root@localhost ~]# cat /data/scripts/02_kubernetes_kernel_conf.sh

#!/bin/bash

# 功能: 批量设定kubernetes的内核参数调整

# 版本: v0.1

# 作者: 书记

# 联系: superopsmsb.com

# 禁用swap

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

cat >> /etc/sysctl.d/k8s.conf << EOF

vm.swappiness=0

EOF

sysctl -p /etc/sysctl.d/k8s.conf

# 打开网络转发

cat >> /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

# 加载相应的模块

modprobe br_netfilter

modprobe overlay

sysctl -p /etc/sysctl.d/k8s.conf

脚本执行

master主机执行效果

[root@kubernetes-master ~]# /bin/bash /data/scripts/02_kubernetes_kernel_conf.sh

vm.swappiness = 0

vm.swappiness = 0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

node主机执行效果

[root@kubernetes-master ~]# for i in {15..17};do ssh root@10.0.0.$i mkdir /data/scripts -p; scp /data/scripts/02_kubernetes_kernel_conf.sh root@10.0.0.$i:/data/scripts/02_kubernetes_kernel_conf.sh;ssh root@10.0.0.$i "/bin/bash /data/scripts/02_kubernetes_kernel_conf.sh";done

02_kubernetes_kernel_conf.sh 100% 537 160.6KB/s 00:00

vm.swappiness = 0

vm.swappiness = 0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

02_kubernetes_kernel_conf.sh 100% 537 374.4KB/s 00:00

vm.swappiness = 0

vm.swappiness = 0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

02_kubernetes_kernel_conf.sh 100% 537 154.5KB/s 00:00

vm.swappiness = 0

vm.swappiness = 0

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

软件源配置

简介

由于我们需要在多台主机上初始化kubernetes主机环境,所以我们需要在多台主机上配置kubernetes的软件源,以最简便的方式部署kubernetes环境。

定制阿里云软件源

定制阿里云的关于kubernetes的软件源

[root@kubernetes-master ~]# cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

更新软件源

[root@kubernetes-master ~]# yum makecache fast

其他节点主机同步软件源

[root@kubernetes-master ~]# for i in {15..17};do scp /etc/yum.repos.d/kubernetes.repo root@10.0.0.$i:/etc/yum.repos.d/kubernetes.repo;ssh root@10.0.0.$i "yum makecache fast";done

小结

1.1.3 容器环境¶

学习目标

这一节,我们从 Docker环境、环境配置、小结 三个方面来学习。

Docker环境

简介

由于kubernetes1.24版本才开始将默认支持的容器引擎转换为了Containerd了,所以这里我们还是以Docker软件作为后端容器的引擎,因为目前的CKA考试环境是以kubernetes1.23版本为基准的。

软件源配置

安装基础依赖软件

[root@kubernetes-master ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

定制专属的软件源

[root@kubernetes-master ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

安装软件

确定最新版本的docker

[root@kubernetes-master ~]# yum list docker-ce --showduplicates | sort -r

安装最新版本的docker

[root@kubernetes-master ~]# yum install -y docker-ce

检查效果

启动docker服务

[root@kubernetes-master ~]# systemctl restart docker

检查效果

[root@kubernetes-master ~]# docker version

Client: Docker Engine - Community

Version: 20.10.17

API version: 1.41

Go version: go1.17.11

Git commit: 100c701

Built: Mon Jun 6 23:05:12 2022

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.17

API version: 1.41 (minimum version 1.12)

Go version: go1.17.11

Git commit: a89b842

Built: Mon Jun 6 23:03:33 2022

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: 1.6.6

GitCommit: 10c12954828e7c7c9b6e0ea9b0c02b01407d3ae1

runc:

Version: 1.1.2

GitCommit: v1.1.2-0-ga916309

docker-init:

Version: 0.19.0

GitCommit: de40ad0

环境配置

需求

1 镜像仓库

默认安装的docker会从官方网站上获取docker镜像,有时候会因为网络因素无法获取,所以我们需要配置国内镜像仓库的加速器

2 kubernetes的改造

kubernetes的创建容器,需要借助于kubelet来管理Docker,而默认的Docker服务进程的管理方式不是kubelet的类型,所以需要改造Docker的服务启动类型为systemd方式。

注意:

默认情况下,Docker的服务修改有两种方式:

1 Docker服务 - 需要修改启动服务文件,需要重载服务文件,比较繁琐。

2 daemon.json文件 - 修改文件后,只需要重启docker服务即可,该文件默认情况下不存在。

定制docker配置文件

定制配置文件

[root@kubernetes-master ~]# cat >> /etc/docker/daemon.json <<-EOF

{

"registry-mirrors": [

"http://74f21445.m.daocloud.io",

"https://registry.docker-cn.com",

"http://hub-mirror.c.163.com",

"https://docker.mirrors.ustc.edu.cn"

],

"insecure-registries": ["10.0.0.20:80"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

重启docker服务

[root@kubernetes-master ~]# systemctl restart docker

检查效果

查看配置效果

[root@kubernetes-master ~]# docker info

Client:

...

Server:

...

Cgroup Driver: systemd

...

Insecure Registries:

10.0.0.20:80

127.0.0.0/8

Registry Mirrors:

http://74f21445.m.daocloud.io/

https://registry.docker-cn.com/

http://hub-mirror.c.163.com/

https://docker.mirrors.ustc.edu.cn/

Live Restore Enabled: false

docker环境定制脚本

查看脚本内容

[root@localhost ~]# cat /data/scripts/03_kubernetes_docker_install.sh

#!/bin/bash

# 功能: 安装部署Docker容器环境

# 版本: v0.1

# 作者: 书记

# 联系: superopsmsb.com

# 准备工作

# 软件源配置

softs_base(){

# 安装基础软件

yum install -y yum-utils device-mapper-persistent-data lvm2

# 定制软件仓库源

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# 更新软件源

systemctl restart network

yum makecache fast

}

# 软件安装

soft_install(){

# 安装最新版的docker软件

yum install -y docker-ce

# 重启docker服务

systemctl restart docker

}

# 加速器配置

speed_config(){

# 定制加速器配置

cat > /etc/docker/daemon.json <<-EOF

{

"registry-mirrors": [

"http://74f21445.m.daocloud.io",

"https://registry.docker-cn.com",

"http://hub-mirror.c.163.com",

"https://docker.mirrors.ustc.edu.cn"

],

"insecure-registries": ["10.0.0.20:80"],

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

# 重启docker服务

systemctl restart docker

}

# 环境监测

docker_check(){

process_name=$(docker info | grep 'p D' | awk '{print $NF}')

[ "${process_name}" == "systemd" ] && echo "Docker软件部署完毕" || (echo "Docker软件部署失败" && exit)

}

# 软件部署

main(){

softs_base

soft_install

speed_config

docker_check

}

# 调用主函数

main

其他主机环境部署docker

[root@kubernetes-master ~]# for i in {15..17} 20; do ssh root@10.0.0.$i "mkdir -p /data/scripts"; scp /data/scripts/03_kubernetes_docker_install.sh root@10.0.0.$i:/data/scripts/03_kubernetes_docker_install.sh; done

其他主机各自执行下面的脚本

/bin/bash /data/scripts/03_kubernetes_docker_install.sh

小结

1.1.4 仓库环境¶

学习目标

这一节,我们从 容器仓库、Harbor实践、小结 三个方面来学习。

容器仓库

简介

Harbor是一个用于存储和分发Docker镜像的企业级Registry服务器,虽然Docker官方也提供了公共的镜像仓库,但是从安全和效率等方面考虑,部署企业内部的私有环境Registry是非常必要的,Harbor除了存储和分发镜像外还具有用户管理,项目管理,配置管理和日志查询,高可用部署等主要功能。

在本地搭建一个Harbor服务,其他在同一局域网的机器可以使用Harbor进行镜像提交和拉取,搭建前需要本地安装docker服务和docker-compose服务。

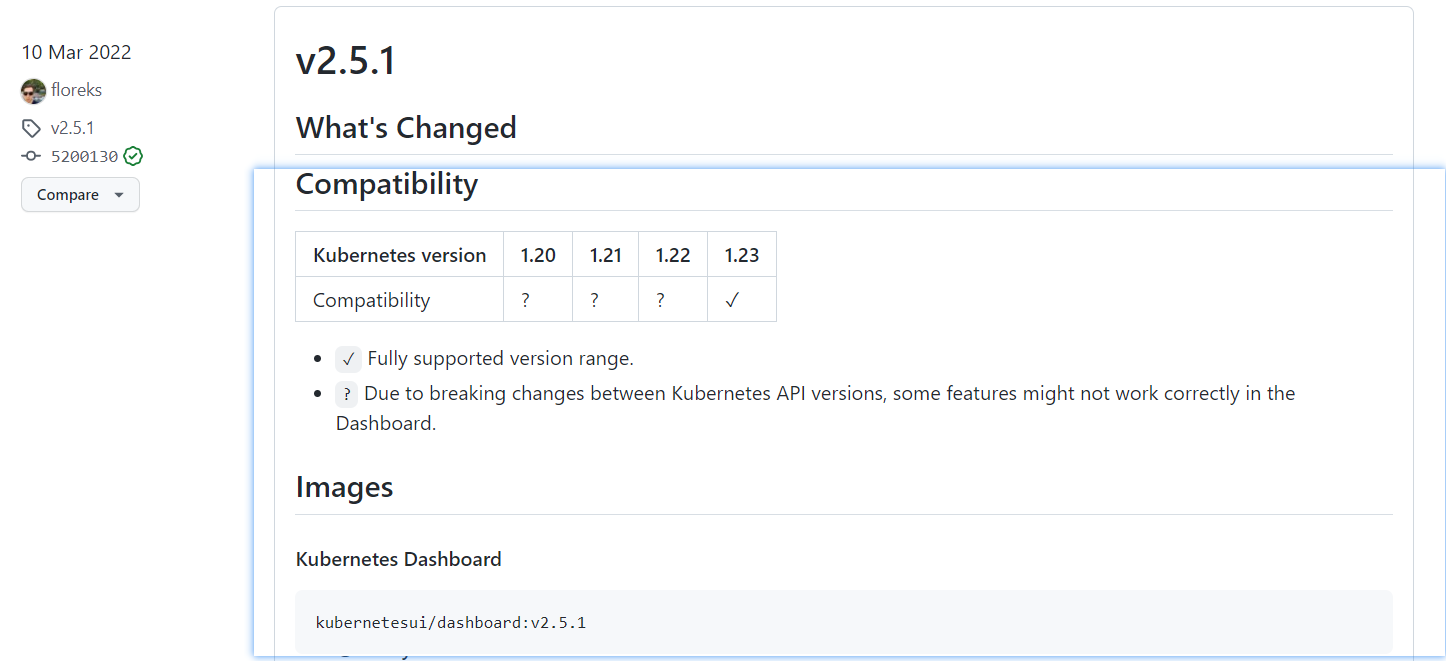

最新的软件版本是 2.5.3,我们这里采用 2.5.0版本。

compose部署

安装docker-compose

[root@kubernetes-register ~]# yum install -y docker-compose

harbor部署

下载软件

[root@kubernetes-register ~]# mkdir /data/{softs,server} -p

[root@kubernetes-register ~]# cd /data/softs

[root@kubernetes-register ~]# wget https://github.com/goharbor/harbor/releases/download/v2.5.0/harbor-offline-installer-v2.5.0.tgz

解压软件

[root@kubernetes-register ~]# tar -zxvf harbor-offline-installer-v2.5.0.tgz -C /data/server/

[root@kubernetes-register ~]# cd /data/server/harbor/

加载镜像

[root@kubernetes-register /data/server/harbor]# docker load < harbor.v2.5.0.tar.gz

[root@kubernetes-register /data/server/harbor]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

goharbor/harbor-exporter v2.5.0 36396f138dfb 3 months ago 86.7MB

goharbor/chartmuseum-photon v2.5.0 eaedcf1f700b 3 months ago 225MB

goharbor/redis-photon v2.5.0 1e00fcc9ae63 3 months ago 156MB

goharbor/trivy-adapter-photon v2.5.0 4e24a6327c97 3 months ago 164MB

goharbor/notary-server-photon v2.5.0 6d5fe726af7f 3 months ago 112MB

goharbor/notary-signer-photon v2.5.0 932eed8b6e8d 3 months ago 109MB

goharbor/harbor-registryctl v2.5.0 90ef6b10ab31 3 months ago 136MB

goharbor/registry-photon v2.5.0 30e130148067 3 months ago 77.5MB

goharbor/nginx-photon v2.5.0 5041274b8b8a 3 months ago 44MB

goharbor/harbor-log v2.5.0 89fd73f9714d 3 months ago 160MB

goharbor/harbor-jobservice v2.5.0 1d097e877be4 3 months ago 226MB

goharbor/harbor-core v2.5.0 42a54bc05b02 3 months ago 202MB

goharbor/harbor-portal v2.5.0 c206e936f4f9 3 months ago 52.3MB

goharbor/harbor-db v2.5.0 d40a1ae87646 3 months ago 223MB

goharbor/prepare v2.5.0 36539574668f 3 months ago 268MB

备份配置

[root@kubernetes-register /data/server/harbor]# cp harbor.yml.tmpl harbor.yml

修改配置

[root@kubernetes-register /data/server/harbor]# vim harbor.yml.tmpl

# 修改主机名

hostname: kubernetes-register.superopsmsb.com

http:

port: 80

#https: 注释ssl相关的部分

# port: 443

# certificate: /your/certificate/path

# private_key: /your/private/key/path

# 修改harbor的登录密码

harbor_admin_password: 123456

# 设定harbor的数据存储目录

data_volume: /data/server/harbor/data

配置harbor

[root@kubernetes-register /data/server/harbor]# ./prepare

prepare base dir is set to /data/server/harbor

WARNING:root:WARNING: HTTP protocol is insecure. Harbor will deprecate http protocol in the future. Please make sure to upgrade to https

...

Generated configuration file: /compose_location/docker-compose.yml

Clean up the input dir

启动harbor

[root@kubernetes-register /data/server/harbor]# ./install.sh

[Step 0]: checking if docker is installed ...

...

[Step 1]: checking docker-compose is installed ...

...

[Step 2]: loading Harbor images ...

...

Loaded image: goharbor/harbor-exporter:v2.5.0

[Step 3]: preparing environment ...

...

[Step 4]: preparing harbor configs ...

...

[Step 5]: starting Harbor ...

...

✔ ----Harbor has been installed and started successfully.----

检查效果

[root@kubernetes-register /data/server/harbor]# docker-compose ps

Name Command State Ports

-------------------------------------------------------------------------------------------------

harbor-core /harbor/entrypoint.sh Up

harbor-db /docker-entrypoint.sh 96 13 Up

harbor-jobservice /harbor/entrypoint.sh Up

harbor-log /bin/sh -c /usr/local/bin/ ... Up 127.0.0.1:1514->10514/tcp

harbor-portal nginx -g daemon off; Up

nginx nginx -g daemon off; Up 0.0.0.0:80->8080/tcp,:::80->8080/tcp

redis redis-server /etc/redis.conf Up

registry /home/harbor/entrypoint.sh Up

registryctl /home/harbor/start.sh Up

定制服务启动脚本

定制服务启动脚本

[root@kubernetes-register /data/server/harbor]# cat /lib/systemd/system/harbor.service

[Unit]

Description=Harbor

After=docker.service systemd-networkd.service systemd-resolved.service

Requires=docker.service

Documentation=http://github.com/vmware/harbor

[Service]

Type=simple

Restart=on-failure

RestartSec=5

#需要注意harbor的安装位置

ExecStart=/usr/bin/docker-compose --file /data/server/harbor/docker-compose.yml up

ExecStop=/usr/bin/docker-compose --file /data/server/harbor/docker-compose.yml down

[Install]

WantedBy=multi-user.target

加载服务配置文件

[root@kubernetes-register /data/server/harbor]# systemctl daemon-reload

启动服务

[root@kubernetes-register /data/server/harbor]# systemctl start harbor

检查状态

[root@kubernetes-register /data/server/harbor]# systemctl status harbor

设置开机自启动

[root@kubernetes-register /data/server/harbor]# systemctl enable harbor

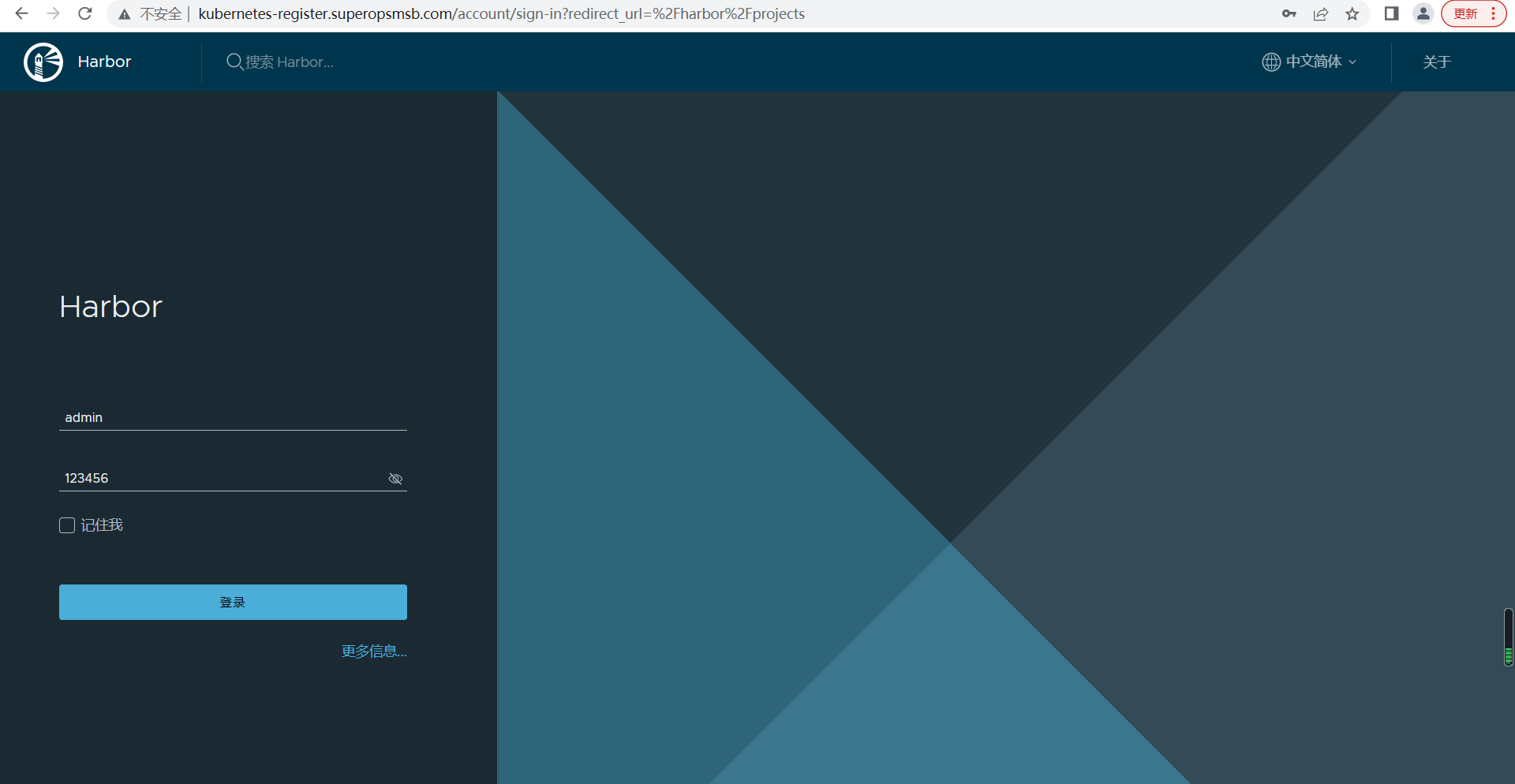

Harbor实践

windows定制harbor的访问域名

10.0.0.20 kubernetes-register.superopsmsb.com

浏览器访问域名,用户名: admin, 密码:123456

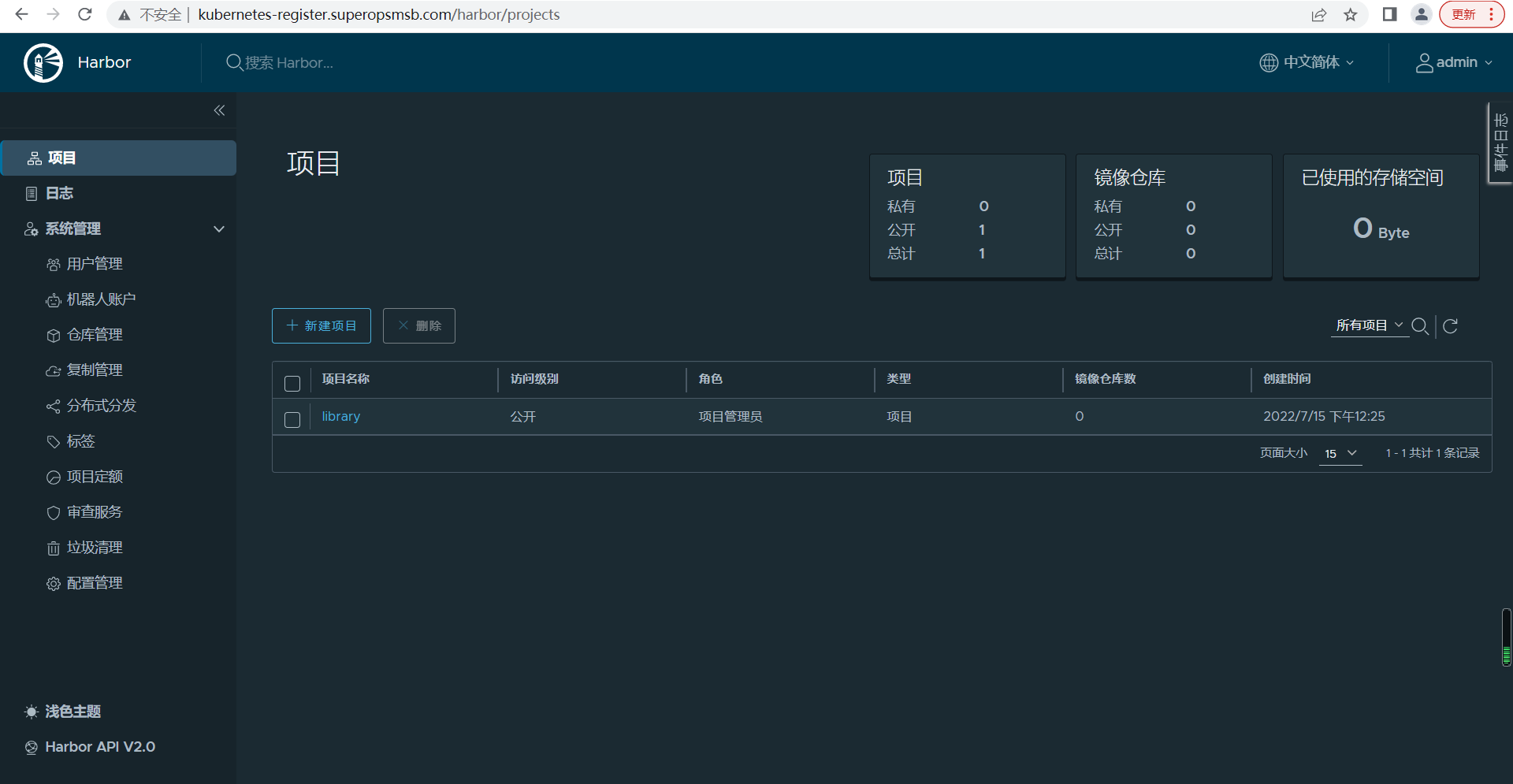

输入用户名和密码后,点击登录,查看harbor的首页效果

创建工作账号

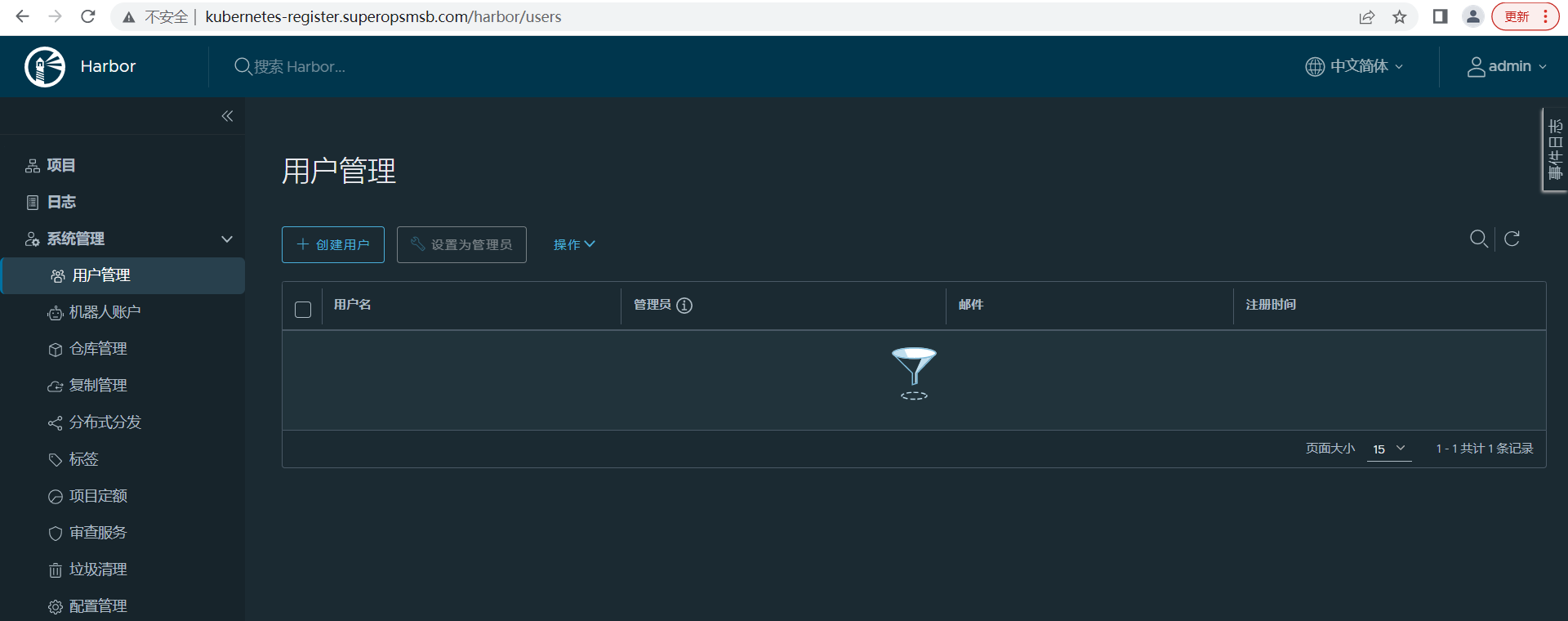

点击用户管理,进入用户创建界面

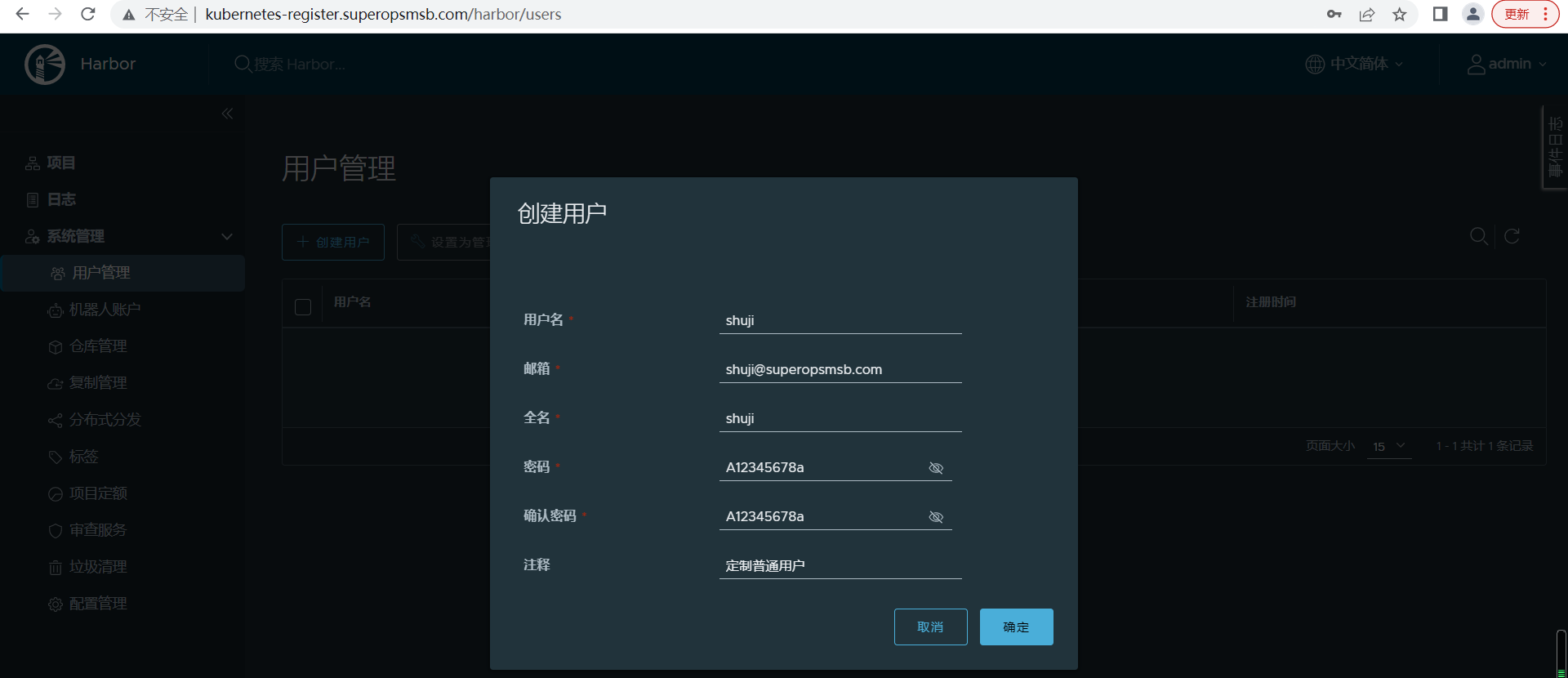

点击创建用户,进入用户创建界面

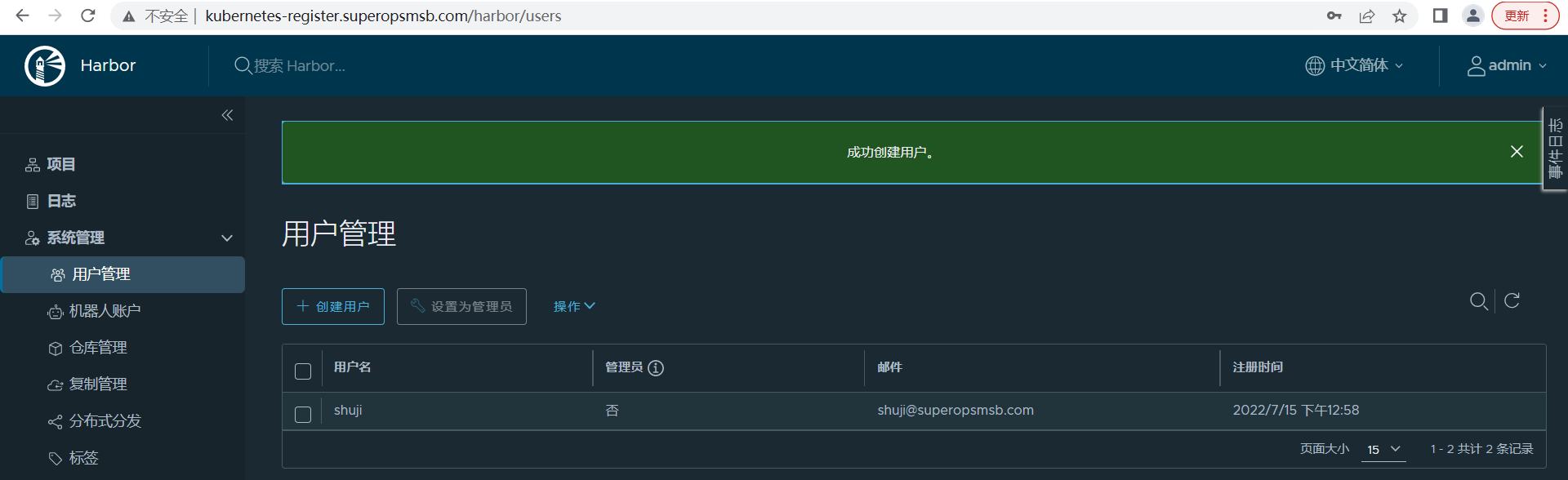

点击确定后,查看创建用户效果

点击左上角的管理员名称,退出终端页面

仓库管理

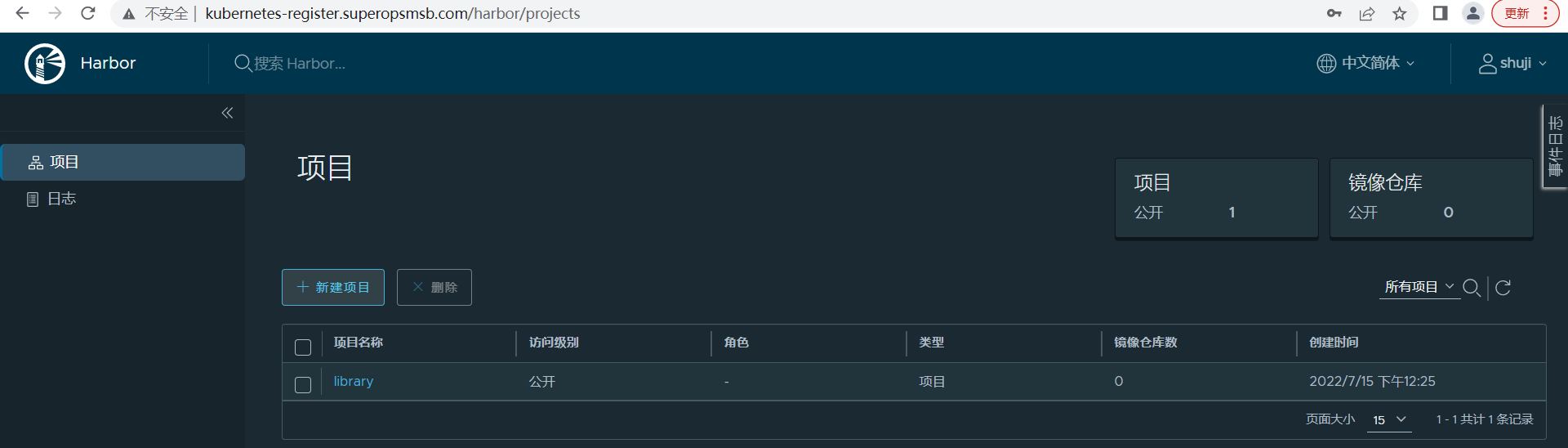

采用普通用户登录到harbor中

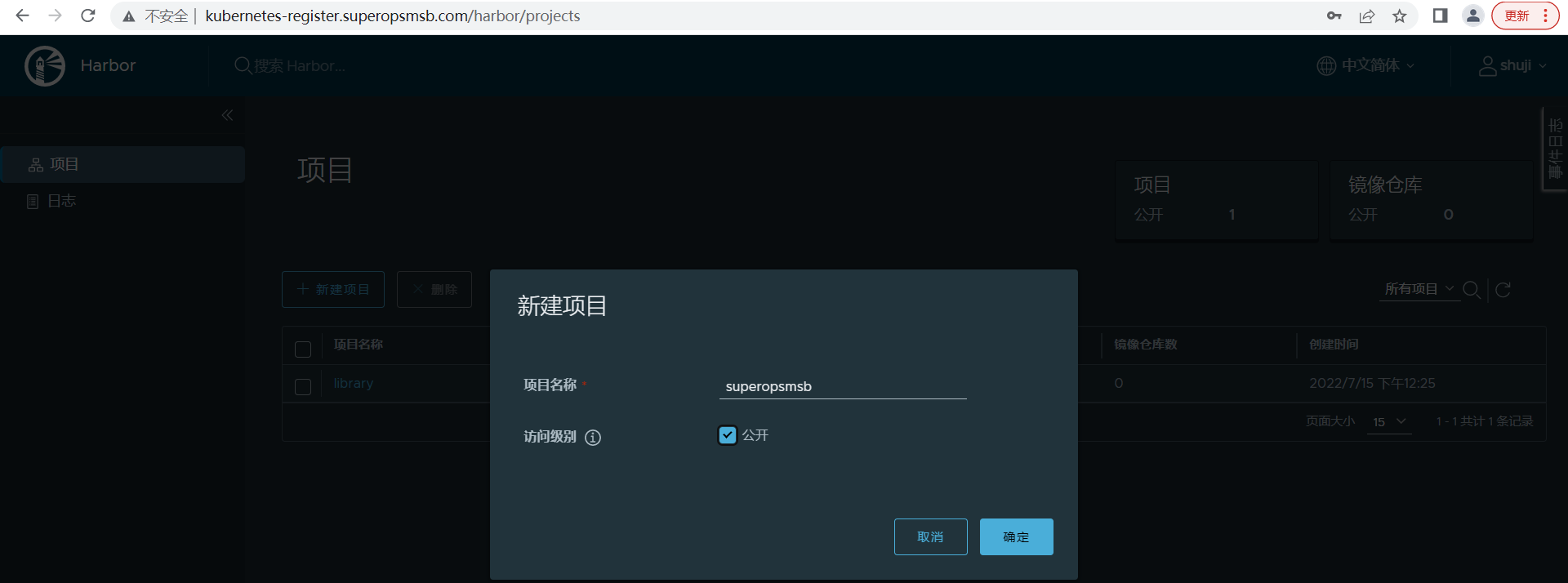

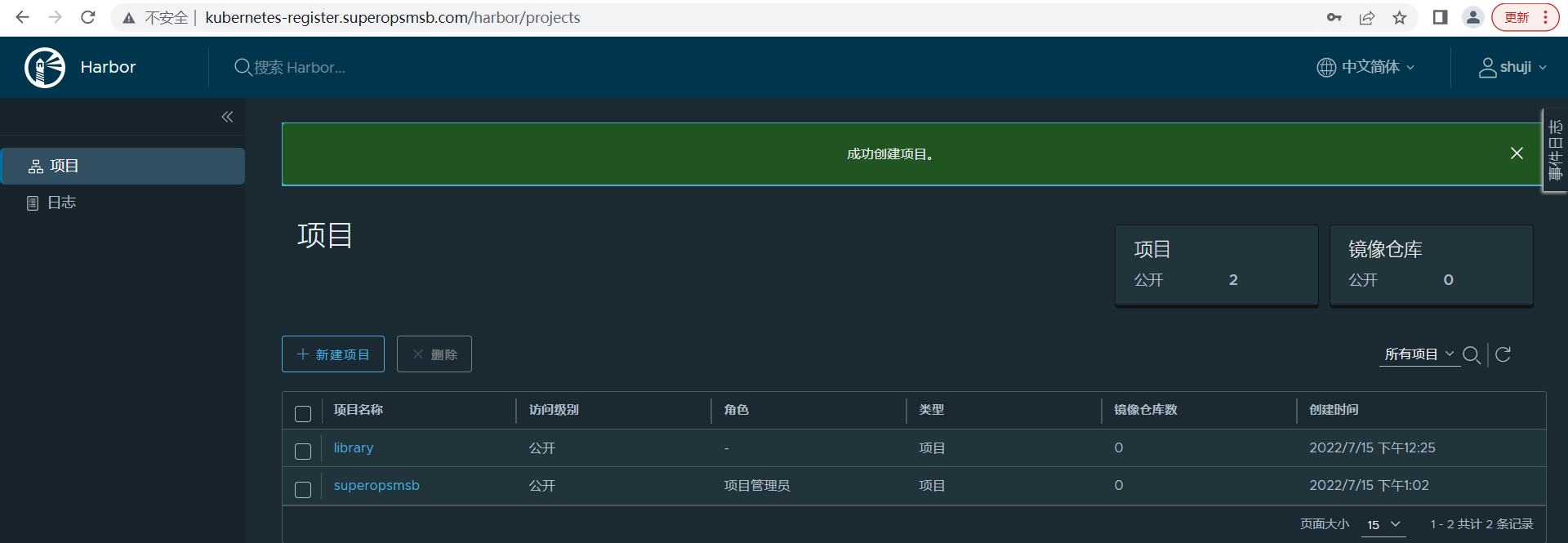

创建shuji用户专用的项目仓库,名称为 superopsmsb,权限为公开的

点击确定后,查看效果

提交镜像

准备docker的配置文件

[root@kubernetes-master ~]# grep insecure /etc/docker/daemon.json

"insecure-registries": ["kubernetes-register.superopsmsb.com"],

[root@kubernetes-master ~]# systemctl restart docker

[root@kubernetes-master ~]# docker info | grep -A2 Insecure

Insecure Registries:

kubernetes-register.superopsmsb.com

127.0.0.0/8

登录仓库

[root@kubernetes-master ~]# docker login kubernetes-register.superopsmsb.com -u shuji

Password: # 输入登录密码 A12345678a

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

下载镜像

[root@kubernetes-master ~]# docker pull busybox

定制镜像标签

[root@kubernetes-master ~]# docker tag busybox kubernetes-register.superopsmsb.com/superopsmsb/busybox:v0.1

推送镜像

[root@kubernetes-master ~]# docker push kubernetes-register.superopsmsb.com/superopsmsb/busybox:v0.1

The push refers to repository [kubernetes-register.superopsmsb.com/superopsmsb/busybox]

7ad00cd55506: Pushed

v0.1: digest: sha256:dcdf379c574e1773d703f0c0d56d67594e7a91d6b84d11ff46799f60fb081c52 size: 527

删除镜像

[root@kubernetes-master ~]# docker rmi busybox kubernetes-register.superopsmsb.com/superopsmsb/busybox:v0.1

下载镜像

[root@kubernetes-master ~]# docker pull kubernetes-register.superopsmsb.com/superopsmsb/busybox:v0.1

v0.1: Pulling from superopsmsb/busybox

19d511225f94: Pull complete

Digest: sha256:dcdf379c574e1773d703f0c0d56d67594e7a91d6b84d11ff46799f60fb081c52

Status: Downloaded newer image for kubernetes-register.superopsmsb.com/superopsmsb/busybox:v0.1

kubernetes-register.superopsmsb.com/superopsmsb/busybox:v0.1

结果显示:

我们的harbor私有仓库就构建好了

同步所有的docker配置

同步所有主机的docker配置

[root@kubernetes-master ~]# for i in 15 16 17;do scp /etc/docker/daemon.json root@10.0.0.$i:/etc/docker/daemon.json; ssh root@10.0.0.$i "systemctl restart docker"; done

daemon.json 100% 299 250.0KB/s 00:00

daemon.json 100% 299 249.6KB/s 00:00

daemon.json 100% 299 243.5KB/s 00:00

小结

1.1.5 master环境部署¶

学习目标

这一节,我们从 软件安装、环境初始化、小结 三个方面来学习。

软件安装

简介

我们已经把kubernetes集群所有主机的软件源配置完毕了,所以接下来,我们需要做的就是如何部署kubernetes环境

软件安装

查看默认的最新版本

[root@kubernetes-master ~]# yum list kubeadm

已加载插件:fastestmirror

Loading mirror speeds from cached hostfile

可安装的软件包

kubeadm.x86_64 1.24.3-0 kubernetes

查看软件的最近版本

[root@kubernetes-master ~]# yum list kubeadm --showduplicates | sort -r | grep 1.2

kubeadm.x86_64 1.24.3-0 kubernetes

kubeadm.x86_64 1.24.2-0 kubernetes

kubeadm.x86_64 1.24.1-0 kubernetes

kubeadm.x86_64 1.24.0-0 kubernetes

kubeadm.x86_64 1.23.9-0 kubernetes

kubeadm.x86_64 1.23.8-0 kubernetes

...

安装制定版本

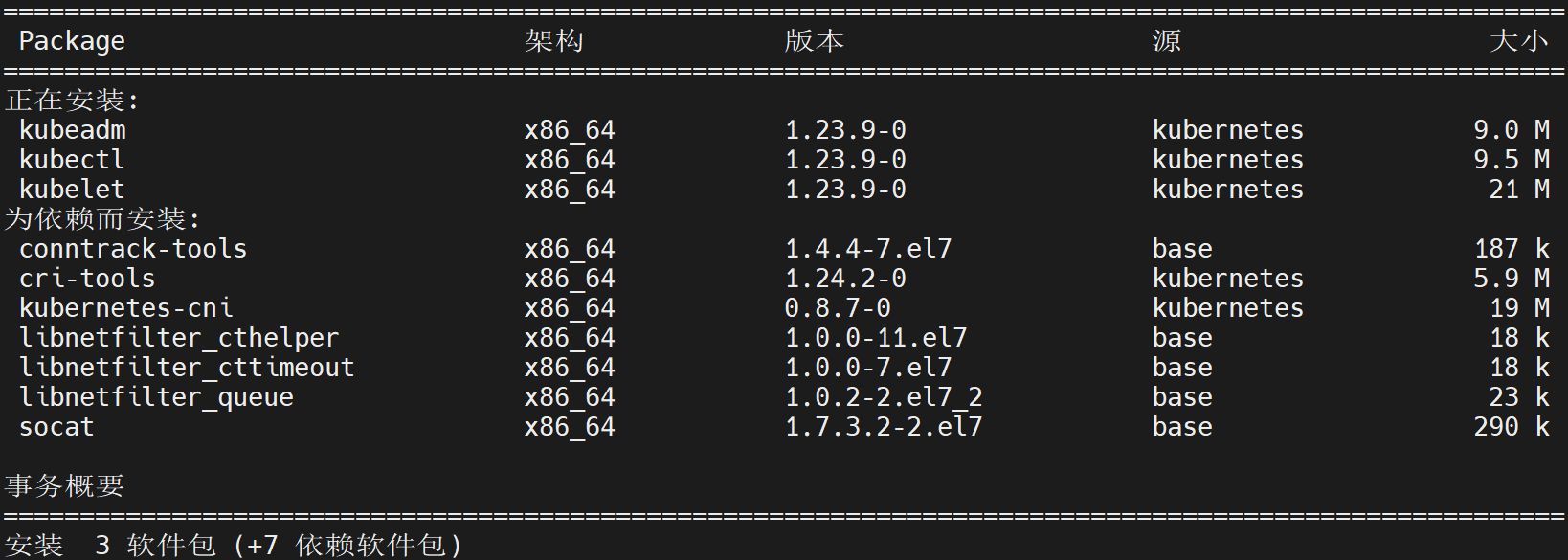

[root@kubernetes-master ~]# yum install kubeadm-1.23.9-0 kubectl-1.23.9-0 kubelet-1.23.9-0 -y

注意:

核心软件解析

kubeadm 主要是对k8s集群来进行管理的,所以在master角色主机上安装

kubelet 是以服务的方式来进行启动,主要用于收集节点主机的信息

kubectl 主要是用来对集群中的资源对象进行管控,一半情况下,node角色的节点是不需要安装的。

依赖软件解析

libnetfilter_xxx是Linux系统下网络数据包过滤的配置工具

kubernetes-cni是容器网络通信的软件

socat是kubelet的依赖

cri-tools是CRI容器运行时接口的命令行工具

命令解读

查看集群初始化命令

[root@kubernetes-master ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.9", GitCommit:"c1de2d70269039fe55efb98e737d9a29f9155246", GitTreeState:"clean", BuildDate:"2022-07-13T14:25:37Z", GoVersion:"go1.17.11", Compiler:"gc", Platform:"linux/amd64"}

[root@kubernetes-master ~]# kubeadm --help

┌──────────────────────────────────────────────────────────┐

│ KUBEADM │

│ Easily bootstrap a secure Kubernetes cluster │

│ │

│ Please give us feedback at: │

│ https://github.com/kubernetes/kubeadm/issues │

└──────────────────────────────────────────────────────────┘

Example usage:

Create a two-machine cluster with one control-plane node

(which controls the cluster), and one worker node

(where your workloads, like Pods and Deployments run).

┌──────────────────────────────────────────────────────────┐

│ On the first machine: │

├──────────────────────────────────────────────────────────┤

│ control-plane# kubeadm init │

└──────────────────────────────────────────────────────────┘

┌──────────────────────────────────────────────────────────┐

│ On the second machine: │

├──────────────────────────────────────────────────────────┤

│ worker# kubeadm join <arguments-returned-from-init> │

└──────────────────────────────────────────────────────────┘

You can then repeat the second step on as many other machines as you like.

Usage:

kubeadm [command]

Available Commands:

certs Commands related to handling kubernetes certificates

completion Output shell completion code for the specified shell (bash or zsh)

config Manage configuration for a kubeadm cluster persisted in a ConfigMap in the cluster

help Help about any command

init Run this command in order to set up the Kubernetes control plane

join Run this on any machine you wish to join an existing cluster

kubeconfig Kubeconfig file utilities

reset Performs a best effort revert of changes made to this host by 'kubeadm init' or 'kubeadm join'

token Manage bootstrap tokens

upgrade Upgrade your cluster smoothly to a newer version with this command

version Print the version of kubeadm

Flags:

--add-dir-header If true, adds the file directory to the header of the log messages

-h, --help help for kubeadm

--log-file string If non-empty, use this log file

--log-file-max-size uint Defines the maximum size a log file can grow to. Unit is megabytes. If the value is 0, the maximum file size is unlimited. (default 1800)

--one-output If true, only write logs to their native severity level (vs also writing to each lower severity level)

--rootfs string [EXPERIMENTAL] The path to the 'real' host root filesystem.

--skip-headers If true, avoid header prefixes in the log messages

--skip-log-headers If true, avoid headers when opening log files

-v, --v Level number for the log level verbosity

Additional help topics:

kubeadm alpha Kubeadm experimental sub-commands

Use "kubeadm [command] --help" for more information about a command.

信息查看

查看集群初始化时候的默认配置

[root@kubernetes-master ~]# kubeadm config print init-defaults

apiVersion: kubeadm.k8s.io/v1beta3

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 1.2.3.4

bindPort: 6443

nodeRegistration:

criSocket: /var/run/dockershim.sock

imagePullPolicy: IfNotPresent

name: node

taints: null

---

apiServer:

timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta3

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: k8s.gcr.io

kind: ClusterConfiguration

kubernetesVersion: 1.23.0

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

scheduler: {}

注意:

可以重点关注,master和node的注释信息、镜像仓库和子网信息

这条命令可以生成定制的kubeadm.conf认证文件

检查当前版本的kubeadm所依赖的镜像版本

[root@kubernetes-master ~]# kubeadm config images list

I0715 16:08:07.269149 7605 version.go:255] remote version is much newer: v1.24.3; falling back to: stable-1.23

k8s.gcr.io/kube-apiserver:v1.23.9

k8s.gcr.io/kube-controller-manager:v1.23.9

k8s.gcr.io/kube-scheduler:v1.23.9

k8s.gcr.io/kube-proxy:v1.23.9

k8s.gcr.io/pause:3.6

k8s.gcr.io/etcd:3.5.1-0

k8s.gcr.io/coredns/coredns:v1.8.6

检查指定版本的kubeadm所依赖的镜像版本

[root@kubernetes-master ~]# kubeadm config images list --kubernetes-version=v1.23.6

k8s.gcr.io/kube-apiserver:v1.23.6

k8s.gcr.io/kube-controller-manager:v1.23.6

k8s.gcr.io/kube-scheduler:v1.23.6

k8s.gcr.io/kube-proxy:v1.23.6

k8s.gcr.io/pause:3.6

k8s.gcr.io/etcd:3.5.1-0

k8s.gcr.io/coredns/coredns:v1.8.6

拉取环境依赖的镜像

预拉取镜像文件

[root@kubernetes-master ~]# kubeadm config images pull

I0715 16:09:24.185598 7624 version.go:255] remote version is much newer: v1.24.3; falling back to: stable-1.23

failed to pull image "k8s.gcr.io/kube-apiserver:v1.23.9": output: Error response from daemon: Get "https://k8s.gcr.io/v2/": dial tcp 142.250.157.82:443: connect: connection timed out

, error: exit status 1

To see the stack trace of this error execute with --v=5 or higher

注意:

由于默认情况下,这些镜像是从一个我们访问不到的网站上拉取的,所以这一步在没有实现科学上网的前提下,不要执行。

推荐在初始化之前,更换一下镜像仓库,提前获取文件,比如我们可以从

registry.aliyuncs.com/google_containers/镜像名称 获取镜像文件

镜像获取脚本内容

[root@localhost ~]# cat /data/scripts/04_kubernetes_get_images.sh

#!/bin/bash

# 功能: 获取kubernetes依赖的Docker镜像文件

# 版本: v0.1

# 作者: 书记

# 联系: superopsmsb.com

# 定制普通环境变量

ali_mirror='registry.aliyuncs.com'

harbor_mirror='kubernetes-register.superopsmsb.com'

harbor_repo='google_containers'

# 环境定制

kubernetes_image_get(){

# 获取脚本参数

kubernetes_version="$1"

# 获取制定kubernetes版本所需镜像

images=$(kubeadm config images list --kubernetes-version=${kubernetes_version} | awk -F "/" '{print $NF}')

# 获取依赖镜像

for i in ${images}

do

docker pull ${ali_mirror}/${harbor_repo}/$i

docker tag ${ali_mirror}/${harbor_repo}/$i ${harbor_mirror}/${harbor_repo}/$i

docker rmi ${ali_mirror}/${harbor_repo}/$i

done

}

# 脚本的帮助

Usage(){

echo "/bin/bash $0 "

}

# 脚本主流程

if [ $# -eq 0 ]

then

read -p "请输入要获取kubernetes镜像的版本(示例: v1.23.9): " kubernetes_version

kubernetes_image_get ${kubernetes_version}

else

Usage

fi

脚本执行效果

[root@kubernetes-master ~]# /bin/bash /data/scripts/04_kubernetes_get_images.sh

请输入要获取kubernetes镜像的版本(示例: v1.23.9): v1.23.9

...

查看镜像

[root@kubernetes-master ~]# docker images

[root@kubernetes-master ~]# docker images | awk '{print $1,$2}'

REPOSITORY TAG

kubernetes-register.superopsmsb.com/google_containers/kube-apiserver v1.23.9

kubernetes-register.superopsmsb.com/google_containers/kube-controller-manager v1.23.9

kubernetes-register.superopsmsb.com/google_containers/kube-scheduler v1.23.9

kubernetes-register.superopsmsb.com/google_containers/kube-proxy v1.23.9

kubernetes-register.superopsmsb.com/google_containers/etcd 3.5.1-0

kubernetes-register.superopsmsb.com/google_containers/coredns v1.8.6

kubernetes-register.superopsmsb.com/google_containers/pause 3.6

harbor创建仓库

登录harbor仓库,创建一个google_containers的公开仓库

登录仓库

[root@kubernetes-master ~]# docker login kubernetes-register.superopsmsb.com -u shuji

Password: # 输入A12345678a

提交镜像

[root@kubernetes-master ~]# for i in $(docker images | grep -v TAG | awk '{print $1":"$2}');do docker push $i;done

环境初始化

master主机环境初始化

环境初始化命令

kubeadm init --kubernetes-version=1.23.9 \

--apiserver-advertise-address=10.0.0.12 \

--image-repository kubernetes-register.superopsmsb.com/google_containers \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16 \

--ignore-preflight-errors=Swap

参数解析:

--apiserver-advertise-address 要设定为当前集群的master地址,而且必须为ipv4|ipv6地址

由于kubeadm init命令默认去外网获取镜像,这里我们使用--image-repository来指定使用国内镜像

--kubernetes-version选项的版本号用于指定要部署的Kubenretes程序版本,它需要与当前的kubeadm支持的版本保持一致;该参数是必须的

--pod-network-cidr选项用于指定分Pod分配使用的网络地址,它通常应该与要部署使用的网络插件(例如flannel、calico等)的默认设定保持一致,10.244.0.0/16是flannel默认使用的网络;

--service-cidr用于指定为Service分配使用的网络地址,它由kubernetes管理,默认即为10.96.0.0/12;

--ignore-preflight-errors=Swap 如果没有该项,必须保证系统禁用Swap设备的状态。一般最好加上

--image-repository 用于指定我们在安装kubernetes环境的时候,从哪个镜像里面下载相关的docker镜像,如果需要用本地的仓库,那么就用本地的仓库地址即可

环境初始化过程

环境初始化命令

[root@kubernetes-master ~]# kubeadm init --kubernetes-version=1.23.9 \

> --apiserver-advertise-address=10.0.0.12 \

> --image-repository kubernetes-register.superopsmsb.com/google_containers \

> --service-cidr=10.96.0.0/12 \

> --pod-network-cidr=10.244.0.0/16 \

> --ignore-preflight-errors=Swap

# 环境初始化过程

[init] Using Kubernetes version: v1.23.9

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes-master kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 10.0.0.12]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [kubernetes-master localhost] and IPs [10.0.0.12 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [kubernetes-master localhost] and IPs [10.0.0.12 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 11.006830 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.23" in namespace kube-system with the configuration for the kubelets in the cluster

NOTE: The "kubelet-config-1.23" naming of the kubelet ConfigMap is deprecated. Once the UnversionedKubeletConfigMap feature gate graduates to Beta the default name will become just "kubelet-config". Kubeadm upgrade will handle this transition transparently.

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node kubernetes-master as control-plane by adding the labels: [node-role.kubernetes.io/master(deprecated) node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node kubernetes-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: vudfvt.fwpohpbb7yw2qy49

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

# 基本初始化完毕后,需要做的一些事情

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

# 定制kubernetes的登录权限

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

# 定制kubernetes的网络配置

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

# node节点注册到master节点

kubeadm join 10.0.0.12:6443 --token vudfvt.fwpohpbb7yw2qy49 \

--discovery-token-ca-cert-hash sha256:110b1efec63971fda17154782dc1179fa93ef90a8804be381e5a83a8a7748545

确认效果

未设定权限前操作

[root@kubernetes-master ~]# kubectl get nodes

The connection to the server localhost:8080 was refused - did you specify the right host or port?

设定kubernetes的认证权限

[root@kubernetes-master ~]# mkdir -p $HOME/.kube

[root@kubernetes-master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@kubernetes-master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

再次检测

[root@kubernetes-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubernetes-master NotReady control-plane,master 4m10s v1.23.9

小结

1.1.6 node环境部署¶

学习目标

这一节,我们从 节点初始化、网络环境配置、小结 三个方面来学习。

节点初始化

简介

对于node节点来说,我们无需对集群环境进行管理,所以不需要安装和部署kubectl软件,其他的正常安装,然后根据master节点的认证通信,我们可以进行节点加入集群的配置。

安装软件

所有node节点都执行如下步骤:

[root@kubernetes-master ~]# for i in {15..17}; do ssh root@10.0.0.$i "yum install kubeadm-1.23.9-0 kubelet-1.23.9-0 -y";done

节点初始化(以node1为例)

节点1进行环境初始化

[root@kubernetes-node1 ~]# kubeadm join 10.0.0.12:6443 --token vudfvt.fwpohpbb7yw2qy49 \

> --discovery-token-ca-cert-hash sha256:110b1efec63971fda17154782dc1179fa93ef90a8804be381e5a83a8a7748545

[preflight] Running pre-flight checks

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

回到master节点主机查看节点效果

[root@kubernetes-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubernetes-master NotReady control-plane,master 17m v1.23.9

kubernetes-node2 NotReady <none> 2m10s v1.23.9

所有节点都做完后,再次查看master的节点效果

[root@kubernetes-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubernetes-master NotReady control-plane,master 21m v1.23.9

kubernetes-node1 NotReady <none> 110s v1.23.9

kubernetes-node2 NotReady <none> 6m17s v1.23.9

kubernetes-node3 NotReady <none> 17s v1.23.9

网络环境配置

简介

根据master节点初始化的效果,我们这里需要单独将网络插件的功能实现

插件环境部署

创建基本目录

mkdir /data/kubernetes/flannel -p

cd /data/kubernetes/flannel

获取配置文件

wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

获取相关镜像

[root@kubernetes-master /data/kubernetes/flannel]# grep image kube-flannel.yml | grep -v '#'

image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0

image: rancher/mirrored-flannelcni-flannel:v0.18.1

image: rancher/mirrored-flannelcni-flannel:v0.18.1

定制镜像标签

for i in $(grep image kube-flannel.yml | grep -v '#' | awk -F '/' '{print $NF}')

do

docker pull rancher/$i

docker tag rancher/$i kubernetes-register.superopsmsb.com/google_containers/$i

docker push kubernetes-register.superopsmsb.com/google_containers/$i

docker rmi rancher/$i

done

备份配置文件

[root@kubernetes-master /data/kubernetes/flannel]# cp kube-flannel.yml{,.bak}

修改配置文件

[root@kubernetes-master /data/kubernetes/flannel]# sed -i '/ image:/s/rancher/kubernetes-register.superopsmsb.com\/google_containers/' kube-flannel.yml

[root@kubernetes-master /data/kubernetes/flannel]# sed -n '/ image:/p' kube-flannel.yml

image: kubernetes-register.superopsmsb.com/google_containers/mirrored-flannelcni-flannel-cni-plugin:v1.1.0

image: kubernetes-register.superopsmsb.com/google_containers/mirrored-flannelcni-flannel:v0.18.1

image: kubernetes-register.superopsmsb.com/google_containers/mirrored-flannelcni-flannel:v0.18.1

应用配置文件

[root@kubernetes-master /data/kubernetes/flannel]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

检查效果

查看集群节点效果

[root@kubernetes-master /data/kubernetes/flannel]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubernetes-master Ready control-plane,master 62m v1.23.9

kubernetes-node1 Ready <none> 42m v1.23.9

kubernetes-node2 Ready <none> 47m v1.23.9

kubernetes-node3 Ready <none> 41m v1.23.9

查看集群pod效果

[root@kubernetes-master /data/kubernetes/flannel]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5d555c984-gt4w9 1/1 Running 0 62m

coredns-5d555c984-t4gps 1/1 Running 0 62m

etcd-kubernetes-master 1/1 Running 0 62m

kube-apiserver-kubernetes-master 1/1 Running 0 62m

kube-controller-manager-kubernetes-master 1/1 Running 0 62m

kube-proxy-48txz 1/1 Running 0 43m

kube-proxy-5vdhv 1/1 Running 0 41m

kube-proxy-cblk7 1/1 Running 0 47m

kube-proxy-hglfm 1/1 Running 0 62m

kube-scheduler-kubernetes-master 1/1 Running 0 62m

小结

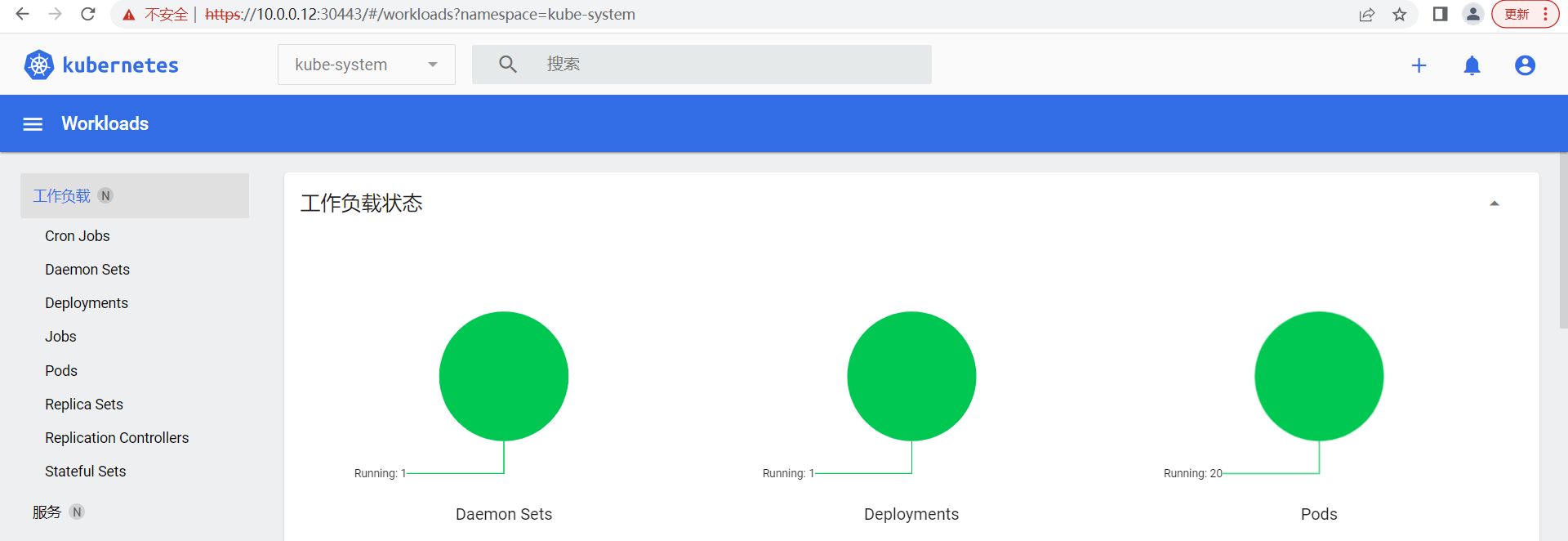

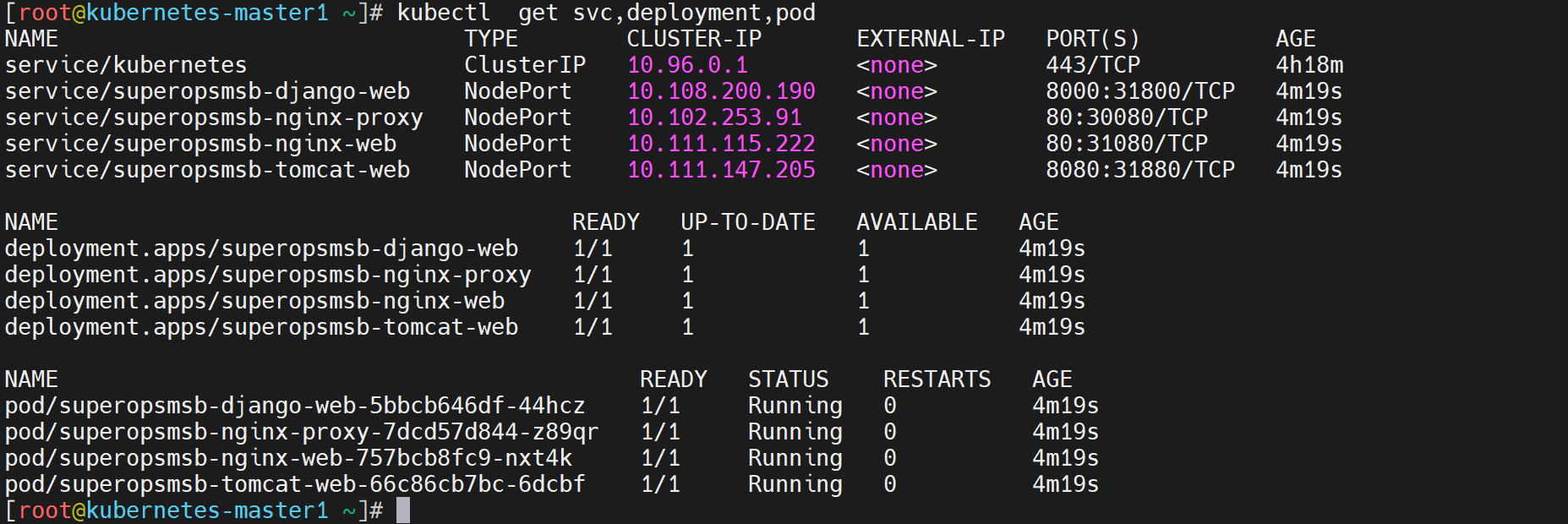

1.1.7 集群环境实践¶

学习目标

这一节,我们从 基础功能、节点管理、小结 三个方面来学习。

基础功能

简介

目前kubernetes的集群环境已经部署完毕了,但是有些基础功能配置还是需要来梳理一下的。默认情况下,我们在master上执行命令的时候,没有办法直接使用tab方式补全命令,我们可以采取下面方式来实现。

命令补全

获取相关环境配置

[root@kubernetes-master ~]# kubectl completion bash

加载这些配置

[root@kubernetes-master ~]# source <(kubectl completion bash)

注意: "<(" 两个符号之间没有空格

放到当前用户的环境文件中

[root@kubernetes-master ~]# echo "source <(kubectl completion bash)" >> ~/.bashrc

[root@kubernetes-master ~]# echo "source <(kubeadm completion bash)" >> ~/.bashrc

[root@kubernetes-master ~]# source ~/.bashrc

测试效果

[root@kubernetes-master ~]# kubectl get n

namespaces networkpolicies.networking.k8s.io nodes

[root@kubernetes-master ~]# kubeadm co

completion config

命令简介

简介

kubectl是kubernetes集群内部管理各种资源对象的核心命令,一般情况下,只有master主机才有

命令帮助

注意:虽然kubernetes的kubectl命令的一些参数发生了变化,甚至是移除,但是不影响旧有命令的正常使用。

[root@kubernetes-master ~]# kubectl --help

kubectl controls the Kubernetes cluster manager.

Find more information at: https://kubernetes.io/docs/reference/kubectl/overview/

Basic Commands (Beginner): 资源对象核心的基础命令

create Create a resource from a file or from stdin

expose Take a replication controller, service, deployment or pod and expose it as a new

Kubernetes service

run 在集群中运行一个指定的镜像

set 为 objects 设置一个指定的特征

Basic Commands (Intermediate): 资源对象的一些基本操作命令

explain Get documentation for a resource

get 显示一个或更多 resources

edit 在服务器上编辑一个资源

delete Delete resources by file names, stdin, resources and names, or by resources and

label selector

Deploy Commands: 应用部署相关的命令

rollout Manage the rollout of a resource

scale Set a new size for a deployment, replica set, or replication controller

autoscale Auto-scale a deployment, replica set, stateful set, or replication controller

Cluster Management Commands: 集群管理相关的命令

certificate 修改 certificate 资源.

cluster-info Display cluster information

top Display resource (CPU/memory) usage

cordon 标记 node 为 unschedulable

uncordon 标记 node 为 schedulable

drain Drain node in preparation for maintenance

taint 更新一个或者多个 node 上的 taints

Troubleshooting and Debugging Commands: 集群故障管理相关的命令

describe 显示一个指定 resource 或者 group 的 resources 详情

logs 输出容器在 pod 中的日志

attach Attach 到一个运行中的 container

exec 在一个 container 中执行一个命令

port-forward Forward one or more local ports to a pod

proxy 运行一个 proxy 到 Kubernetes API server

cp Copy files and directories to and from containers

auth Inspect authorization

debug Create debugging sessions for troubleshooting workloads and nodes

Advanced Commands: 集群管理高阶命令,一般用一个apply即可

diff Diff the live version against a would-be applied version

apply Apply a configuration to a resource by file name or stdin

patch Update fields of a resource

replace Replace a resource by file name or stdin

wait Experimental: Wait for a specific condition on one or many resources

kustomize Build a kustomization target from a directory or URL.

Settings Commands: 集群的一些设置性命令

label 更新在这个资源上的 labels

annotate 更新一个资源的注解

completion Output shell completion code for the specified shell (bash, zsh or fish)

Other Commands: 其他的相关命令

alpha Commands for features in alpha

api-resources Print the supported API resources on the server

api-versions Print the supported API versions on the server, in the form of "group/version"

config 修改 kubeconfig 文件

plugin Provides utilities for interacting with plugins

version 输出 client 和 server 的版本信息

Usage:

kubectl [flags] [options]

Use "kubectl <command> --help" for more information about a given command.

Use "kubectl options" for a list of global command-line options (applies to all commands).

命令的帮助

查看子命令的帮助信息

样式1:kubectl 子命令 --help

样式2:kubectl help 子命令

样式1实践

[root@kubernetes-master ~]# kubectl version --help

Print the client and server version information for the current context.

Examples:

# Print the client and server versions for the current context

kubectl version

...

样式2实践

[root@kubernetes-master ~]# kubectl help version

Print the client and server version information for the current context.

Examples:

# Print the client and server versions for the current context

kubectl version

简单实践

查看当前节点效果

[root@kubernetes-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubernetes-master Ready control-plane,master 77m v1.23.9

kubernetes-node1 Ready <none> 57m v1.23.9

kubernetes-node2 Ready <none> 62m v1.23.9

kubernetes-node3 Ready <none> 56m v1.23.9

移除节点3

[root@kubernetes-master ~]# kubectl delete node kubernetes-node3

node "kubernetes-node3" deleted

查看效果

[root@kubernetes-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubernetes-master Ready control-plane,master 77m v1.23.9

kubernetes-node1 Ready <none> 57m v1.23.9

kubernetes-node2 Ready <none> 62m v1.23.9

节点环境重置

[root@kubernetes-node3 ~]# kubeadm reset

[root@kubernetes-node3 ~]# systemctl restart kubelet docker

[root@kubernetes-node3 ~]# kubeadm join 10.0.0.12:6443 --token vudfvt.fwpohpbb7yw2qy49 --discovery-token-ca-cert-hash sha256:110b1efec63971fda17154782dc1179fa93ef90a8804be381e5a83a8a7748545

master节点查看效果

[root@kubernetes-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubernetes-master Ready control-plane,master 82m v1.23.9

kubernetes-node1 Ready <none> 62m v1.23.9

kubernetes-node2 Ready <none> 67m v1.23.9

kubernetes-node3 Ready <none> 55s v1.23.9

小结

1.1.8 资源对象解读¶

学习目标

这一节,我们从 资源对象、命令解读、小结 三个方面来学习。

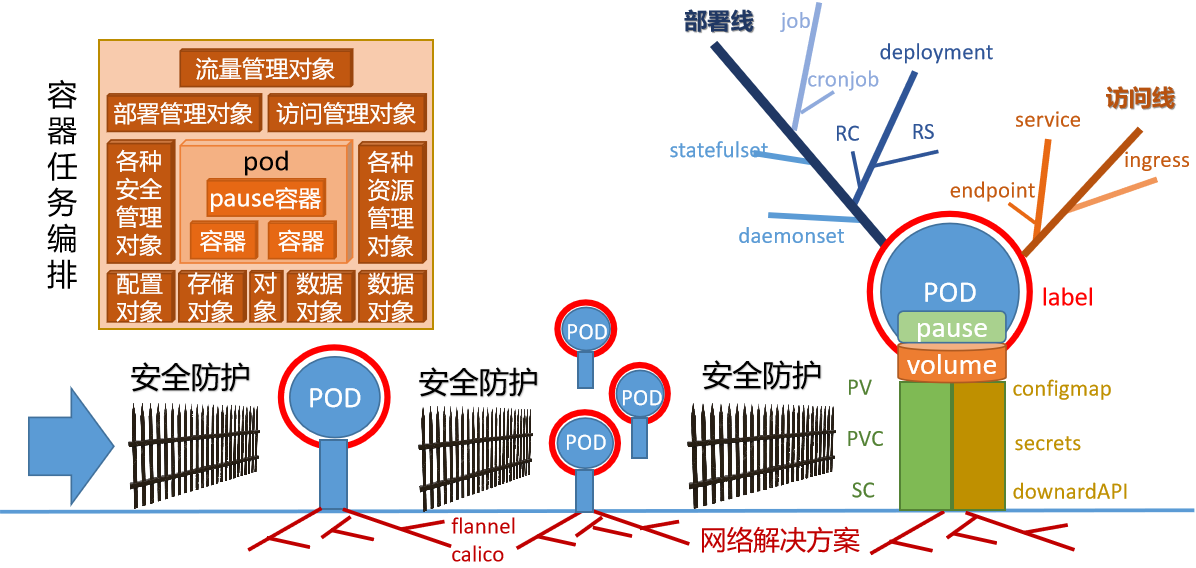

资源对象

简介

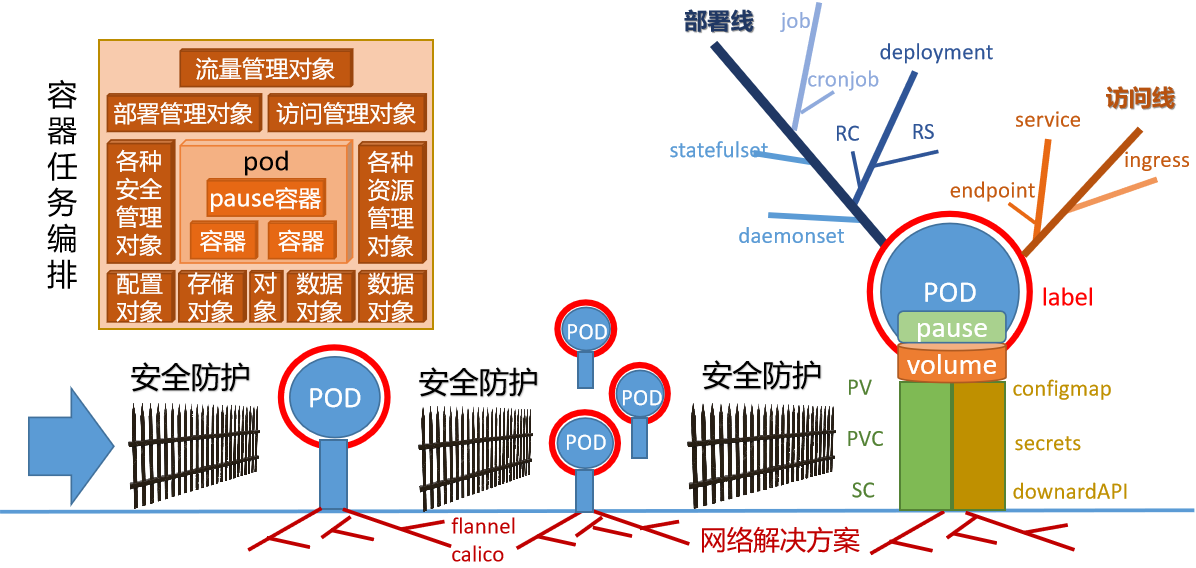

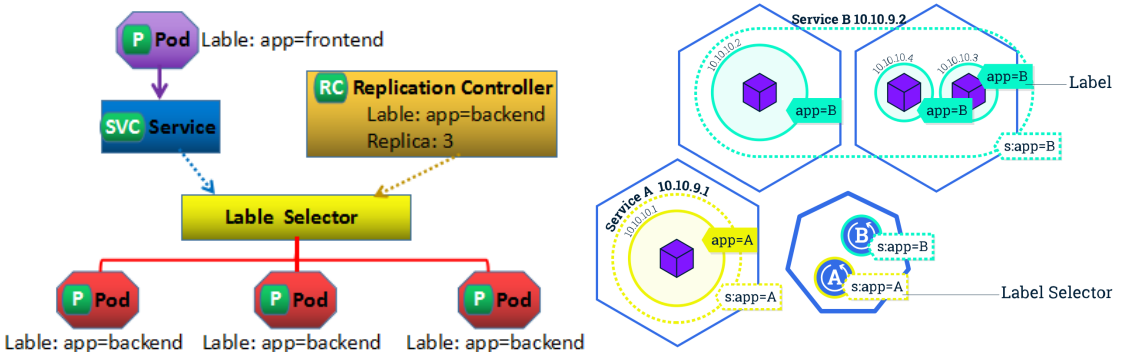

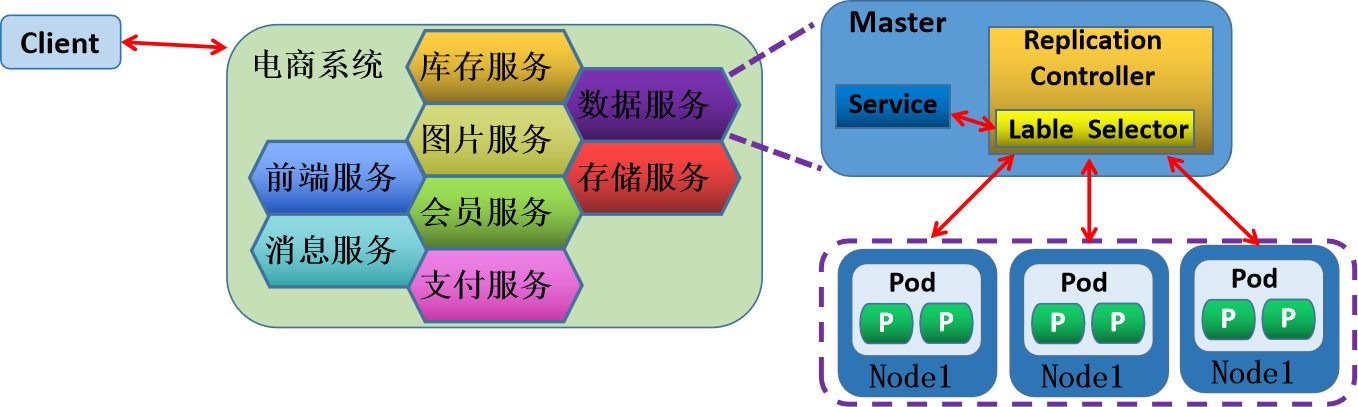

根据之前的对于kubernetes的简介,我们知道kubernetes之所以在大规模的容器场景下的管理效率非常好,原因在于它将我们人工对于容器的手工操作全部整合到的各种资源对象里面。

在进行资源对象解读之前,我们需要给大家灌输一个基本的认识:

kubernetes的资源对象有两种:

默认的资源对象 - 默认的有五六十种,但是常用的也就那么一二十个。

自定义的资源对象 - 用户想怎么定义就怎么定义,数量无法统计,仅需要了解想要了解的即可

常见资源缩写

| 最基础资源对象 | |||

|---|---|---|---|

| 资源对象全称 | 缩写 | 资源对象全称 | 缩写 |

| pod/pods | po | node/nodes | no |

| 最常见资源对象 | |||

| 资源对象全称 | 缩写 | 资源对象全称 | 缩写 |

| replication controllers | rc | horizontal pod autoscalers | hpa |

| replica sets | rs | persistent volume | pv |

| deployment | deploy | persistent volume claims | pvc |

| services | svc | ||

| 其他资源对象 | |||

| namespaces | ns | storage classes | sc |

| config maps | cm | clusters | |

| daemon sets | ds | stateful sets | |

| endpoints | ep | secrets | |

| events | ev | jobs | |

| ingresses | ing |

命令解读

语法解读

命令格式:

kubectl [子命令] [资源类型] [资源名称] [其他标识-可选]

参数解析:

子命令:操作Kubernetes资源对象的子命令,常见的有create、delete、describe、get等

create 创建资源对象 describe 查找资源的详细信息

delete 删除资源对象 get 获取资源基本信息

资源类型:Kubernetes资源类型,举例:结点的资源类型是nodes,缩写no

资源名称: Kubernetes资源对象的名称,可以省略。

其他标识: 可选参数,一般用于信息的扩展信息展示

资源对象基本信息

查看资源类型基本信息

[root@kubernetes-master ~]# kubectl api-resources | head -2

NAME SHORTNAMES APIVERSION NAMESPACED KIND

bindings v1 true Binding

[root@kubernetes-master ~]# kubectl api-resources | grep -v NAME | wc -l

56

查看资源类型的版本信息

[root@kubernetes-master ~]# kubectl api-versions | head -n2

admissionregistration.k8s.io/v1

apiextensions.k8s.io/v1

常见资源对象查看

获取资源所在命名空间

[root@kubernetes-master ~]# kubectl get ns

NAME STATUS AGE

default Active 94m

kube-flannel Active 32m

kube-node-lease Active 94m

kube-public Active 94m

kube-system Active 94m

获取命名空间的资源对象

[root@kubernetes-master ~]# kubectl get pod

No resources found in default namespace.

[root@kubernetes-master ~]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-5d555c984-gt4w9 1/1 Running 0 149m

coredns-5d555c984-t4gps 1/1 Running 0 149m

etcd-kubernetes-master 1/1 Running 0 149m

kube-apiserver-kubernetes-master 1/1 Running 0 149m

kube-controller-manager-kubernetes-master 1/1 Running 0 149m

kube-proxy-48txz 1/1 Running 0 129m

kube-proxy-cblk7 1/1 Running 0 134m

kube-proxy-ds8x5 1/1 Running 2 (69m ago) 70m

kube-proxy-hglfm 1/1 Running 0 149m

kube-scheduler-kubernetes-master 1/1 Running 0 149m

cs 获取集群组件相关资源

[root@kubernetes-master ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

sa 和 secrets 获取集群相关的用户相关信息

[root@kubernetes-master ~]# kubectl get sa

NAME SECRETS AGE

default 1 150m

[root@kubernetes-master ~]# kubectl get secrets

NAME TYPE DATA AGE

default-token-nc8rg kubernetes.io/service-account-token 3 150m

信息查看命令

查看资源对象

kubectl get 资源类型 资源名称 <-o yaml/json/wide | -w>

参数解析:

-w 是实时查看资源的状态。

-o 是以多种格式查看资源的属性信息

--raw 从api地址中获取相关资源信息

描述资源对象

kubectl describe 资源类型 资源名称

注意:

这个命令非常重要,一般我们应用部署排错时候,就用它。

查看资源应用的访问日志

kubectl logs 资源类型 资源名称

注意:

这个命令非常重要,一般我们服务排错时候,就用它。

查看信息基本信息

基本的资源对象简要信息

[root@kubernetes-master ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

kubernetes-master Ready control-plane,master 157m v1.23.9

kubernetes-node1 Ready <none> 137m v1.23.9

kubernetes-node2 Ready <none> 142m v1.23.9

kubernetes-node3 Ready <none> 75m v1.23.9

[root@kubernetes-master ~]# kubectl get nodes kubernetes-node1

NAME STATUS ROLES AGE VERSION

kubernetes-node1 Ready <none> 137m v1.23.9

查看资源对象的属性信息

[root@kubernetes-master ~]# kubectl get nodes kubernetes-node1 -o yaml

apiVersion: v1

kind: Node

metadata:

annotations:

...

查看资源对象的扩展信息

[root@kubernetes-master ~]# kubectl get nodes kubernetes-node1 -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

kubernetes-node1 Ready <none> 139m v1.23.9 10.0.0.15 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 docker://20.10.17

查看资源的描述信息

describe 查看资源对象的描述信息

[root@kubernetes-master ~]# kubectl describe namespaces default

Name: default

Labels: kubernetes.io/metadata.name=default

Annotations: <none>

Status: Active

No resource quota.

No LimitRange resource.

logs 查看对象的应用访问日志

[root@kubernetes-master ~]# kubectl -n kube-system logs kube-proxy-hglfm

I0715 08:35:01.883435 1 node.go:163] Successfully retrieved node IP: 10.0.0.12

I0715 08:35:01.883512 1 server_others.go:138] "Detected node IP" address="10.0.0.12"

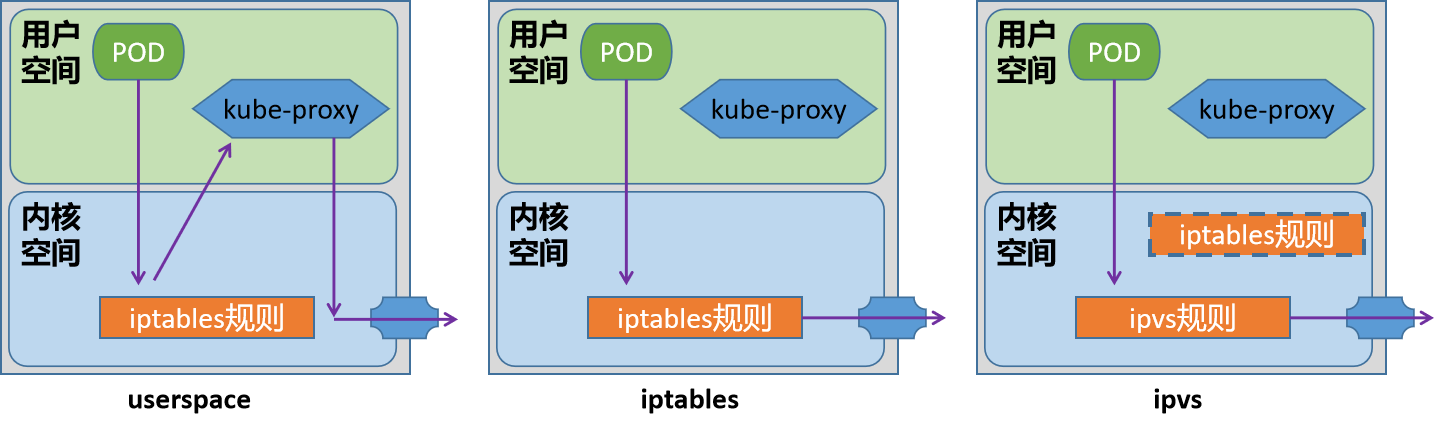

I0715 08:35:01.883536 1 server_others.go:561] "Unknown proxy mode, assuming iptables proxy" proxyMode=""

小结

1.1.9 资源对象实践¶

学习目标

这一节,我们从 命令实践、应用实践、小结 三个方面来学习。

命令实践

准备资源

从网上获取镜像

[root@kubernetes-master ~]# docker pull nginx

镜像打标签

[root@kubernetes-master ~]# docker tag nginx:latest kubernetes-register.superopsmsb.com/superopsmsb/nginx:1.23.0

提交镜像到仓库

[root@kubernetes-master ~]# docker push kubernetes-register.superopsmsb.com/superopsmsb/nginx:1.23.0

删除镜像

[root@kubernetes-master ~]# docker rmi nginx

创建资源对象

创建一个应用

[root@kubernetes-master ~]# kubectl create deployment nginx --image=kubernetes-register.superopsmsb.com/superopsmsb/nginx:1.23.0

查看效果

[root@kubernetes-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-f44f65dc-99dvt 1/1 Running 0 13s 10.244.5.2 kubernetes-node3 <none> <none>

访问效果

[root@kubernetes-master ~]# curl 10.244.5.2 -I

HTTP/1.1 200 OK

Server: nginx/1.23.0

为应用暴露流量入口

[root@kubernetes-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

查看基本信息

[root@kubernetes-master ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@kubernetes-master ~]# kubectl get service nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx NodePort 10.105.31.160 <none> 80:32505/TCP 8s

注意:

这里的 NodePort 代表在所有的节点主机上都开启一个能够被外网访问的端口 32505

访问效果

[root@kubernetes-master ~]# curl 10.105.31.160 -I

HTTP/1.1 200 OK

Server: nginx/1.23.0

[root@kubernetes-master ~]# curl 10.0.0.12:32505 -I

HTTP/1.1 200 OK

Server: nginx/1.23.0

查看容器基本信息

查看资源的访问日志

[root@kubernetes-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-f44f65dc-99dvt 1/1 Running 0 9m47s

[root@kubernetes-master ~]# kubectl logs nginx-f44f65dc-99dvt

...

10.244.0.0 - - [15/Jul/2022:12:45:57 +0000] "HEAD / HTTP/1.1" 200 0 "-" "curl/7.29.0" "-"

查看资源的详情信息

[root@kubernetes-master ~]# kubectl describe pod nginx-f44f65dc-99dvt

# 资源对象的基本属性信息

Name: nginx-f44f65dc-99dvt

Namespace: default

Priority: 0

Node: kubernetes-node3/10.0.0.17

Start Time: Fri, 15 Jul 2022 20:44:17 +0800

Labels: app=nginx

pod-template-hash=f44f65dc

Annotations: <none>

Status: Running

IP: 10.244.5.2

IPs:

IP: 10.244.5.2

Controlled By: ReplicaSet/nginx-f44f65dc

# pod内部的容器相关信息

Containers:

nginx: # 容器的名称

Container ID: docker://8c0d89c8ab48e02495a2db4a2b2133c86811bd8064f800a16739f9532670d854

Image: kubernetes-register.superopsmsb.com/superopsmsb/nginx:1.23.0

Image ID: docker-pullable://kubernetes-register.superopsmsb.com/superopsmsb/nginx@sha256:33cef86aae4e8487ff23a6ca16012fac28ff9e7a5e9759d291a7da06e36ac958

Port: <none>

Host Port: <none>

State: Running

Started: Fri, 15 Jul 2022 20:44:24 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-7sxtx (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

# pod内部数据相关的信息

Volumes:

kube-api-access-7sxtx:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

# 资源对象在创建过程中遇到的各种信息,这段信息是非常重要的

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 10m default-scheduler Successfully assigned default/nginx-f44f65dc-99dvt to kubernetes-node3

Normal Pulling 10m kubelet Pulling image "kubernetes-register.superopsmsb.com/superopsmsb/nginx:1.23.0"

Normal Pulled 10m kubelet Successfully pulled image "kubernetes-register.superopsmsb.com/superopsmsb/nginx:1.23.0" in 4.335869479s

Normal Created 10m kubelet Created container nginx

Normal Started 10m kubelet Started container nginx

资源对象内部的容器信息

[root@kubernetes-master ~]# kubectl exec -it nginx-f44f65dc-99dvt -- /bin/bash

root@nginx-f44f65dc-99dvt:/# env

KUBERNETES_SERVICE_PORT_HTTPS=443

KUBERNETES_SERVICE_PORT=443

HOSTNAME=nginx-f44f65dc-99dvt

...

修改nginx的页面信息

root@nginx-f44f65dc-99dvt:/# grep -A1 'location /' /etc/nginx/conf.d/default.conf

location / {

root /usr/share/nginx/html;

root@nginx-f44f65dc-99dvt:/# echo $HOSTNAME > /usr/share/nginx/html/index.html

root@nginx-f44f65dc-99dvt:/# exit

exit

访问应用效果

[root@kubernetes-master ~]# curl 10.244.5.2

nginx-f44f65dc-99dvt

[root@kubernetes-master ~]# curl 10.0.0.12:32505

nginx-f44f65dc-99dvt

应用实践

资源的扩容缩容

pod的容量扩充

[root@kubernetes-master ~]# kubectl help scale

调整pod数量为3

[root@kubernetes-master ~]# kubectl scale --replicas=3 deployment nginx

deployment.apps/nginx scaled

查看效果

[root@kubernetes-master ~]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/3 3 1 20m

[root@kubernetes-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-f44f65dc-99dvt 1/1 Running 0 20m 10.244.5.2 kubernetes-node3 <none> <none>

nginx-f44f65dc-rskvx 1/1 Running 0 16s 10.244.2.4 kubernetes-node1 <none> <none>

nginx-f44f65dc-xpkgq 1/1 Running 0 16s 10.244.1.2 kubernetes-node2 <none> <none>

pod的容量收缩

[root@kubernetes-master ~]# kubectl scale --replicas=1 deployment nginx

deployment.apps/nginx scaled

[root@kubernetes-master ~]# kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 20m

资源的删除

删除资源有两种方式

方法1:

kubectl delete 资源类型 资源1 资源2 ... 资源n

因为限制了资源类型,所以这种方法只能删除一种资源

方法2:

kubectl delete 资源类型/资源

因为删除对象的时候,指定了资源类型,所以我们可以通过这种资源类型限制的方式同时删除多种类型资源

删除deployment资源

[root@kubernetes-master ~]# kubectl delete deployments nginx

deployment.apps "nginx" deleted

[root@kubernetes-master ~]# kubectl get deployment

No resources found in default namespace.

删除svc资源

[root@kubernetes-master ~]# kubectl delete svc nginx

service "nginx" deleted

[root@kubernetes-master ~]# kubectl get svc nginx

Error from server (NotFound): services "nginx" not found

小结

1.2 多主集群¶

1.2.1 集群环境解读¶

学习目标

这一节,我们从 集群规划、环境解读、小结 三个方面来学习。

集群规划

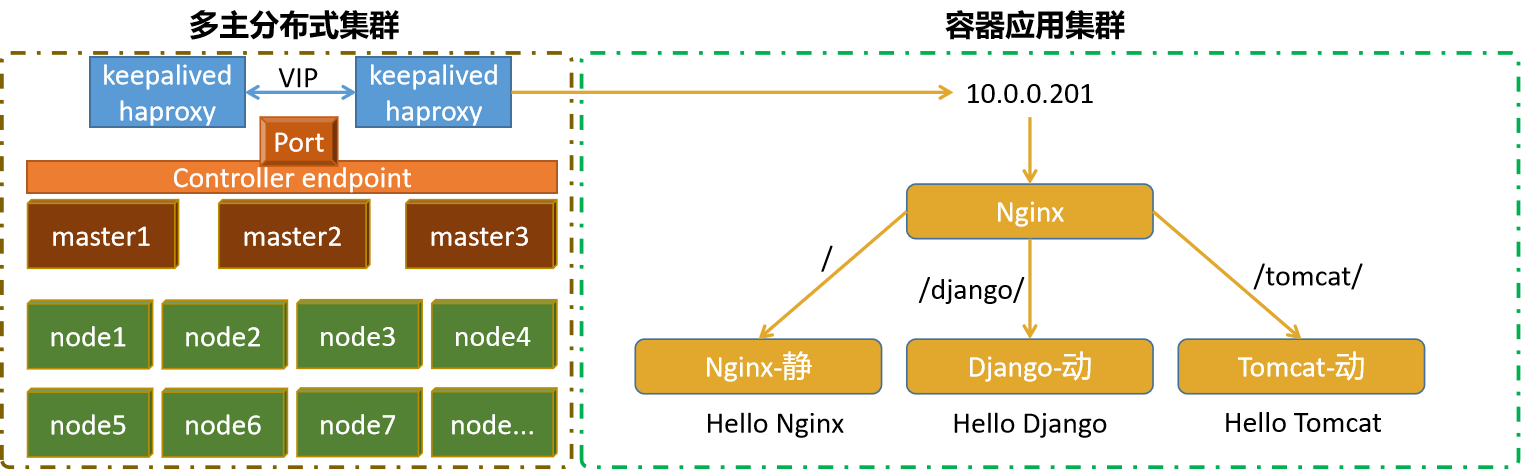

简介

在生产中,因为kubernetes的主角色主机对于整个集群的重要性不可估量,我们的kubernetes集群一般都会采用多主分布式效果。

另外因为大规模环境下,涉及到的资源对象过于繁多,所以,kubernetes集群环境部署的时候,一般会采用属性高度定制的方式来实现。

为了方便后续的集群环境升级的管理操作,我们在高可用的时候,部署 1.23.8的软件版本(当然只需要1.22+版本都可以,不允许出现大跨版本的出现。)

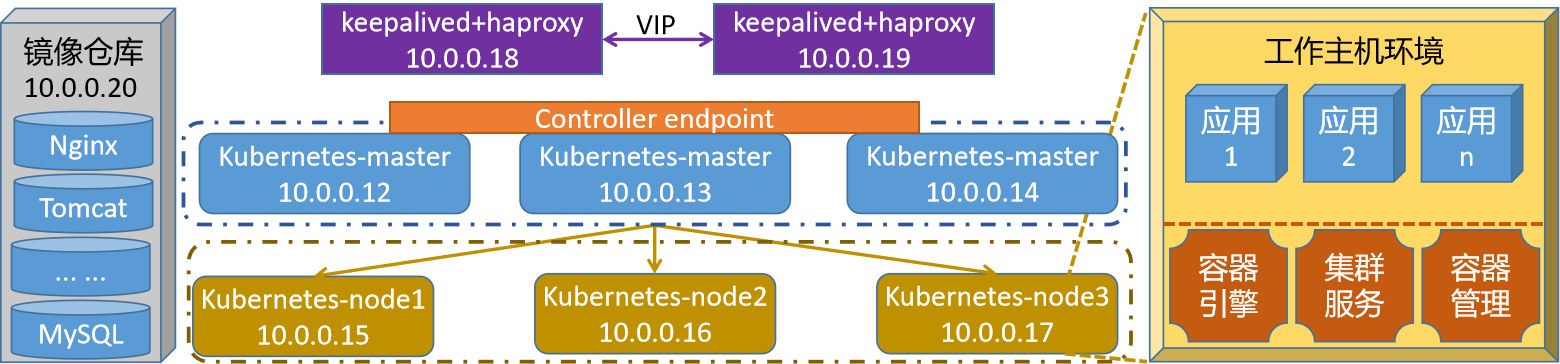

实验环境的效果图

修改master节点主机的hosts文件

[root@localhost ~]# cat /etc/hosts

10.0.0.12 kubernetes-master1.superopsmsb.com kubernetes-master1

10.0.0.13 kubernetes-master2.superopsmsb.com kubernetes-master2

10.0.0.14 kubernetes-master3.superopsmsb.com kubernetes-master3

10.0.0.15 kubernetes-node1.superopsmsb.com kubernetes-node1

10.0.0.16 kubernetes-node2.superopsmsb.com kubernetes-node2

10.0.0.17 kubernetes-node3.superopsmsb.com kubernetes-node3

10.0.0.18 kubernetes-ha1.superopsmsb.com kubernetes-ha1

10.0.0.19 kubernetes-ha2.superopsmsb.com kubernetes-ha2

10.0.0.20 kubernetes-register.superopsmsb.com kubernetes-register

脚本执行实现跨主机免密码认证和hosts文件同步

[root@localhost ~]# /bin/bash /data/scripts/01_remote_host_auth.sh

批量设定远程主机免密码认证管理界面

=====================================================

1: 部署环境 2: 免密认证 3: 同步hosts

4: 设定主机名 5:退出操作

=====================================================

请输入有效的操作id: 1

另外两台master主机的基本配置

kubernetes的内核参数调整

/bin/bash /data/scripts/02_kubernetes_kernel_conf.sh

底层docker环境的部署

/bin/bash /data/scripts/03_kubernetes_docker_install.sh

同步docker环境的基本配置

[root@kubernetes-master ~]# for i in 13 14

> do

> scp /etc/docker/daemon.json root@10.0.0.$i:/etc/docker/daemon.json

> ssh root@10.0.0.$i "systemctl restart docker"

> done

daemon.json 100% 299 86.3KB/s 00:00

daemon.json 100% 299 86.3KB/s 00:00

环境解读

环境组成

多主分布式集群的效果是在单主分布式的基础上,将master主机扩充到3台,作为一个主角色集群来存在,这个时候我们需要考虑数据的接入和输出。

数据接入:

三台master主机需要一个专属的控制平面来统一处理数据来源

- 在k8s中,这入口一般是以port方式存在

数据流出:

在kubernetes集群中,所有的数据都是以etcd的方式来单独存储的,所以这里无序过度干预。

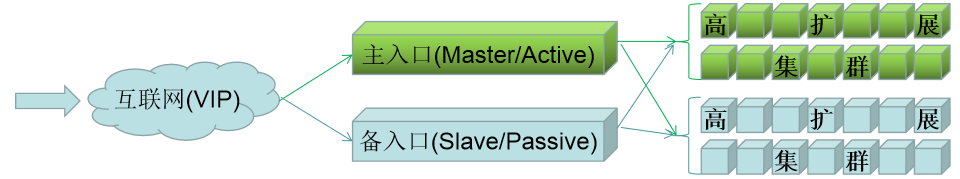

高可用梳理

为了保证所有数据都能够访问master主机,同时避免单台master出现异常,我们一般会通过高可用的方式将流量转交个后端,这里采用常见的 keepalived 和 haproxy的方式来实现。

其他功能

一般来说,对于大型集群来说,其内部的时间同步、域名解析、配置管理、持续交付等功能,都需要单独的主机来进行实现。由于我们这里主要来演示核心的多主集群环境,所以其他的功能,大家可以自由的向当前环境中补充。

小结

1.2.2 高可用环境实践¶

学习目标

这一节,我们从 高可用、高负载、高可用升级、小结 四个方面来学习。

高可用

简介

所谓的高可用,将核心业务使用多台(一般是2台)主机共同工作,支撑并保障核心业务的正常运行,尤其是业务的对外不间断的对外提供服务。核心特点就是"冗余",它存在的目的就是为了解决单点故障(Single Point of Failure)问题的。

高可用集群是基于高扩展基础上的一个更高层次的网站稳定性解决方案。网站的稳定性体现在两个方面:网站可用性和恢复能力

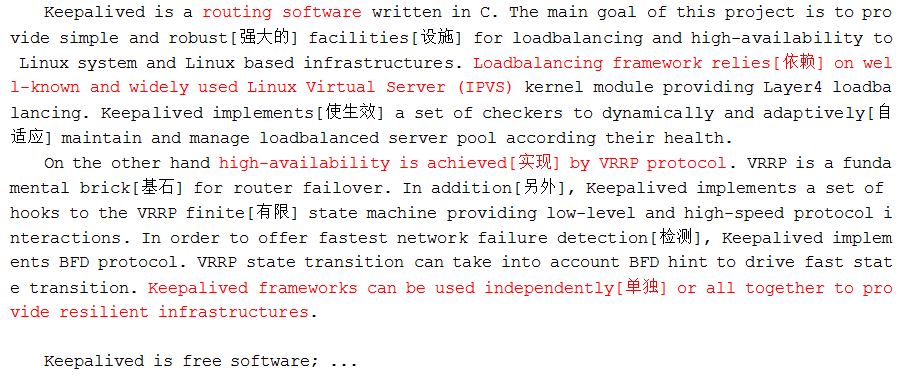

keepalived简介

软件安装

我们在 10.0.0.18和10.0.0.19主机上部署keepalived软件

[root@kubernetes-ha1 ~]# yum install keepalived -y

查看配置文件模板

[root@kubernetes-ha1 ~]# rpm -ql keepalived | grep sample

/usr/share/doc/keepalived-1.3.5/samples

软件配置

10.0.0.19主机配置高可用从节点

[root@kubernetes-ha2 ~]# cp /etc/keepalived/keepalived.conf{,.bak}

[root@kubernetes-ha2 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id kubernetes_ha2

}

vrrp_instance VI_1 {

state BACKUP # 当前节点为高可用从角色

interface eth0

virtual_router_id 51

priority 90

advert_int 1 # 主备通讯时间间隔

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.200 dev eth0 label eth0:1

}

}

重启服务后查看效果

[root@kubernetes-ha2 ~]# systemctl restart keepalived.service

[root@kubernetes-ha2 ~]# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.200 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:50:56:24:cd:0e txqueuelen 1000 (Ethernet)

结果显示:

高可用功能已经开启了

10.0.0.18主机配置高可用主节点

[root@kubernetes-ha1 ~]# cp /etc/keepalived/keepalived.conf{,.bak}

从10.0.0.19主机拉取配置文件

[root@kubernetes-ha1 ~]# scp root@10.0.0.19:/etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf

修改配置文件

[root@kubernetes-ha1 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id kubernetes_ha1

}

vrrp_instance VI_1 {

state MASTER # 当前节点为高可用主角色

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.200 dev eth0 label eth0:1

}

}

启动服务

[root@kubernetes-ha1 ~]# systemctl start keepalived.service

[root@kubernetes-ha1 ~]# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.200 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:50:56:2d:d9:0a txqueuelen 1000 (Ethernet)

[root@kubernetes-ha2 ~]# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

ether 00:50:56:24:cd:0e txqueuelen 1000 (Ethernet)

结果显示:

高可用的主节点把从节点的ip地址给夺过来了,实现了节点的漂移

主角色关闭服务

[root@kubernetes-ha1 ~]# systemctl stop keepalived.service

[root@kubernetes-ha1 ~]# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

ether 00:50:56:2d:d9:0a txqueuelen 1000 (Ethernet)

从节点查看vip效果

[root@kubernetes-ha2 ~]# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.200 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:50:56:24:cd:0e txqueuelen 1000 (Ethernet)

主角色把vip抢过来

[root@kubernetes-ha1 ~]# systemctl start keepalived.service

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.200 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:50:56:2d:d9:0a txqueuelen 1000 (Ethernet)

注意:

在演示实验环境的时候,如果你的主机资源不足,可以只开一台keepalived

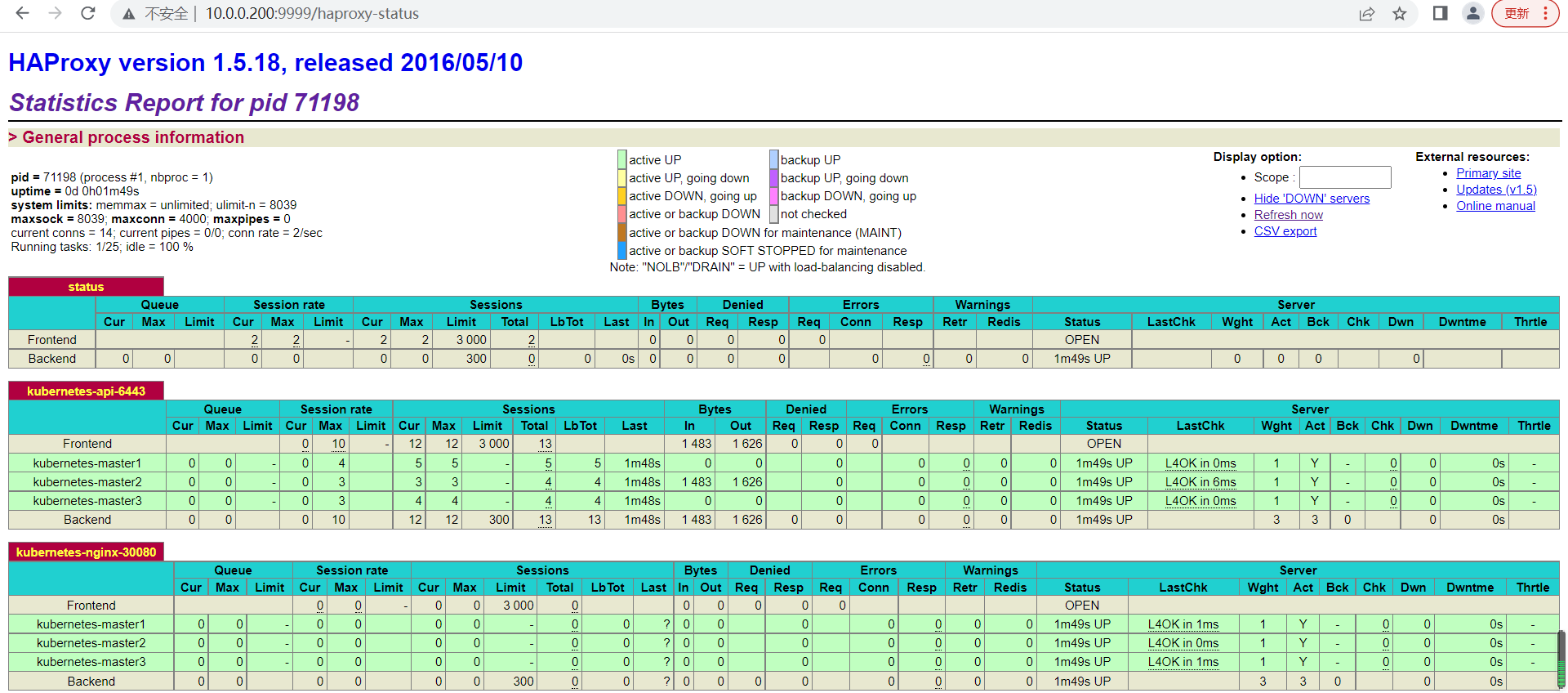

高负载

简介

所谓的高负载集群,指的是在当前业务环境集群中,所有的主机节点都处于正常的工作活动状态,它们共同承担起用户的请求带来的工作负载压力,保证用户的正常访问。支持高可用的软件很多,比如nginx、lvs、haproxy、等,我们这里用的是haproxy。

HAProxy是法国开发者 威利塔罗(Willy Tarreau) 在2000年使用C语言开发的一个开源软件,是一款具备高并发(一万以上)、高性能的TCP和HTTP负载均衡器,支持基于cookie的持久性,自动故障切换,支持正则表达式及web状态统计、它也支持基于数据库的反向代理。

软件安装

我们在 10.0.0.18和10.0.0.19主机上部署haproxy软件

[root@kubernetes-ha1 ~]# yum install haproxy -y

软件配置

10.0.0.18主机配置高负载

[root@kubernetes-ha1 ~]# cp /etc/haproxy/haproxy.cfg{,.bak}

[root@kubernetes-ha1 ~]# cat /etc/haproxy/haproxy.cfg

...

listen status

bind 10.0.0.200:9999

mode http

log global

stats enable

stats uri /haproxy-status

stats auth superopsmsb:123456

listen kubernetes-api-6443

bind 10.0.0.200:6443

mode tcp

server kubernetes-master1 10.0.0.12:6443 check inter 3s fall 3 rise 5

server kubernetes-master2 10.0.0.13:6443 check inter 3s fall 3 rise 5

server kubernetes-master3 10.0.0.14:6443 check inter 3s fall 3 rise 5

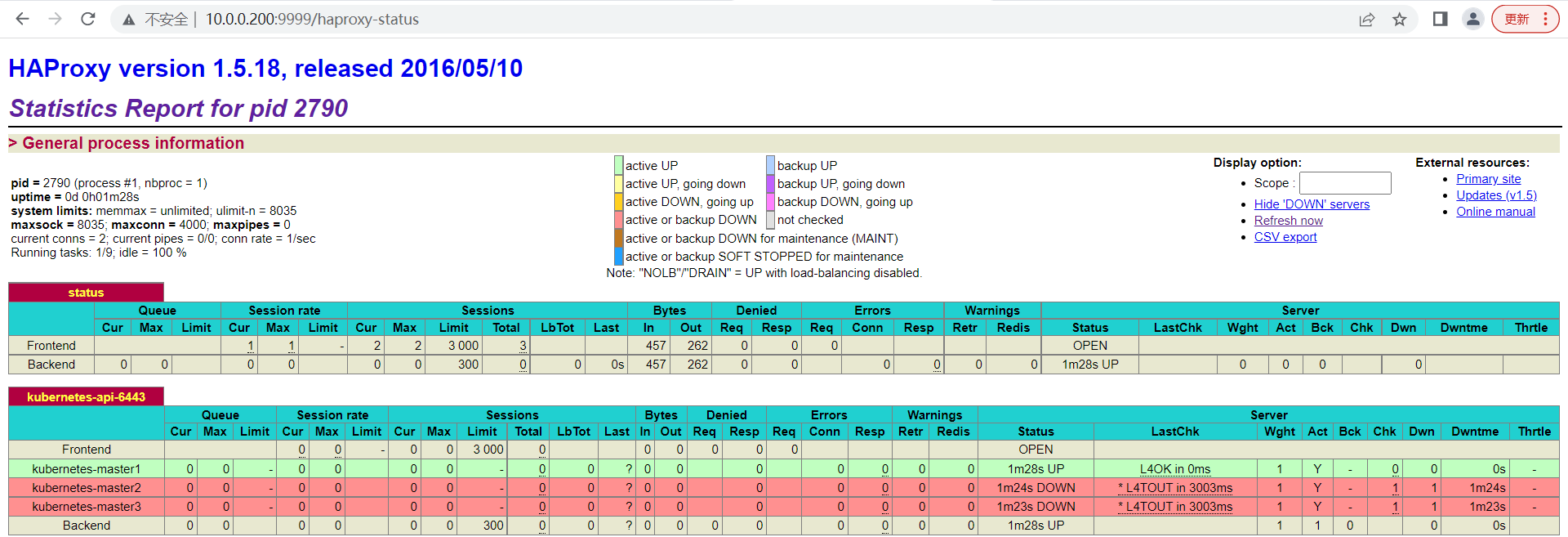

重启服务后查看效果

[root@kubernetes-ha1 ~]# systemctl start haproxy.service

[root@kubernetes-ha1 ~]# netstat -tnulp | head -n4

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 10.0.0.200:6443 0.0.0.0:* LISTEN 2790/haproxy

tcp 0 0 10.0.0.200:9999 0.0.0.0:* LISTEN 2790/haproxy

浏览器查看haproxy的页面效果 10.0.0.200:9999/haproxy-status,输入用户名和密码后效果如下

10.0.0.19主机配置高可用主节点

[root@kubernetes-ha2 ~]# cp /etc/haproxy/haproxy.cfg{,.bak}

把10.0.0.18主机配置传递到10.0.0.19主机

[root@kubernetes-ha1 ~]# scp /etc/haproxy/haproxy.cfg root@10.0.0.19:/etc/haproxy/haproxy.cfg

默认情况下,没有vip的节点是无法启动haproxy的

[root@kubernetes-ha2 ~]# systemctl start haproxy

[root@kubernetes-ha2 ~]# systemctl status haproxy.service

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; disabled; vendor preset: disabled)

Active: failed (Result: exit-code)

...

通过修改内核的方式,让haproxy绑定一个不存在的ip地址从而启动成功

[root@kubernetes-ha2 ~]# sysctl -a | grep nonlocal

net.ipv4.ip_nonlocal_bind = 0

net.ipv6.ip_nonlocal_bind = 0

开启nonlocal的内核参数

[root@kubernetes-ha2 ~]# echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf

[root@kubernetes-ha2 ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

注意:

这一步最好在ha1上也做一下

再次启动haproxy服务

[root@kubernetes-ha2 ~]# systemctl start haproxy

[root@kubernetes-ha2 ~]# systemctl status haproxy | grep Active

Active: active (running) since 六 2062-07-16 08:05:00 CST; 14s ago

[root@kubernetes-ha1 ~]# netstat -tnulp | head -n4

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 10.0.0.200:6443 0.0.0.0:* LISTEN 3062/haproxy

tcp 0 0 10.0.0.200:9999 0.0.0.0:* LISTEN 3062/haproxy

主角色关闭服务

[root@kubernetes-ha1 ~]# systemctl stop keepalived.service

[root@kubernetes-ha1 ~]# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

ether 00:50:56:2d:d9:0a txqueuelen 1000 (Ethernet)

从节点查看vip效果

[root@kubernetes-ha2 ~]# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.200 netmask 255.255.255.255 broadcast 0.0.0.0

ether 00:50:56:24:cd:0e txqueuelen 1000 (Ethernet)

高可用升级

问题

目前我们实现了高可用的环境,无论keepalived是否在哪台主机存活,都有haproxy能够提供服务,但是在后续处理的时候,会出现一种问题,haproxy一旦故障,而keepalived没有同时关闭的话,会导致服务无法访问。效果如下

所以我们有必要对keeaplived进行升级,需要借助于其内部的脚本探测机制实现对后端haproxy进行探测,如果后端haproxy异常就直接把当前的keepalived服务关闭。

制作keepalived的脚本文件

[root@localhost ~]# cat /etc/keepalived/check_haproxy.sh

#!/bin/bash

# 功能: keepalived检测后端haproxy的状态

# 版本: v0.1

# 作者: 书记

# 联系: superopsmsb.com

# 检测后端haproxy的状态

haproxy_status=$(ps -C haproxy --no-header | wc -l)

# 如果后端没有haproxy服务,则尝试启动一次haproxy

if [ $haproxy_status -eq 0 ];then

systemctl start haproxy >> /dev/null 2>&1

sleep 3

# 如果重启haproxy还不成功的话,则关闭keepalived服务

if [ $(ps -C haproxy --no-header | wc -l) -eq 0 ]

then

systemctl stop keepalived

fi

fi

脚本赋予执行权限

[root@kubernetes-ha1 ~]# chmod +x /etc/keepalived/check_haproxy.sh

改造keepalived的配置文件

查看10.0.0.18主机的高可用配置修改

[root@kubernetes-ha1 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id kubernetes_ha1

}

# 定制keepalive检测后端haproxy服务

vrrp_script chk_haproxy {

script "/bin/bash /etc/keepalived/check_haproxy.sh"

interval 2

weight -20 # 每次检测失败都降低权重值

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

# 应用内部的检测机制

track_script {

chk_haproxy

}

virtual_ipaddress {

10.0.0.200 dev eth0 label eth0:1

}

}

注意:

keepalived所有配置后面不允许有任何空格,否则启动有可能出现异常

重启keepalived服务

[root@kubernetes-ha1 ~]# systemctl restart keepalived

传递检测脚本给10.0.0.19主机

[root@kubernetes-ha1 ~]# scp /etc/keepalived/check_haproxy.sh root@10.0.0.19:/etc/keepalived/check_haproxy.sh

10.0.0.19主机,也将这两部分修改配置添加上,并重启keepalived服务

[root@kubernetes-ha2 ~]# systemctl restart keepalived

服务检测

查看当前vip效果

[root@kubernetes-ha1 ~]# ifconfig eth0:1

eth0:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.0.0.200 netmask 255.255.255.255 broadcast 0.0.0.0